Meet the New Africa & UK Accent Translation Models

Accent barriers don’t just cause misunderstandings; they limit opportunity. For people, they can mean fewer job prospects. For companies, they can mean smaller talent pools and uneven customer experiences.

Expanding the reach of Accent Translation helps on both fronts: opening new hiring markets for enterprises, and opening doors to meaningful careers for voices too often excluded.

Each expansion also pushes the boundaries of speech science. Every accent presents unique hurdles — from scarce training data to wide subregional variation — that demand careful technical solutions to ensure intelligibility and fairness.

In our latest Accent Translation expansion, we worked closely with customers to prioritize two important areas:

- African source accents (including South African, Egyptian, Kenyan, Nigerian, Cameroonian, and more), where scarce data and high linguistic diversity have historically held back AI performance.

- UK English output accent, giving enterprises serving UK and European markets a familiar, widely understood option alongside American English.

In this article, I’ll take you behind the scenes of how our science team addressed these challenges, validated the AI models with rigorous evaluation sets, and delivered measurable gains in clarity.

To bring the results to life, we’ve included audio examples so you can hear the difference yourself: one set showcasing a range of African accents now available and a second side-by-side comparison of UK and US English outputs for speakers from Africa, India, the Philippines, and Latin America.

Advancements like this are why we keep innovating, because each one opens new doors for people and enterprises alike. Check out the video below, then dive into the article for the full story and more audio samples!

Africa: Expanding Coverage Across African English Accents

Africa presents one of the most complex challenges in Accent Translation. The continent is home to an estimated 1,500 to 3,000 living languages (roughly one-third of the world’s total) and, by extension, an immense variety of English accents shaped by this diversity. High-quality training data is also scarce, which makes building accurate models especially difficult: the data is spread thinly across dozens of subregions, with significant phonetic and linguistic differences between West, East, South, Central, and North African English.

Beyond data scarcity, African English accents also receive minimal representation in global media, which contributes to these accents being less widely understood outside the continent. This lack of exposure is mirrored in the weaker performance of many Automatic Speech Recognition (ASR) systems when processing African-accented speech, where error rates are consistently higher than for more widely represented accents. The result is not just a technical challenge, but a systemic bias in data-driven speech technology.

How We Built Our African Accent Models

To address these challenges, we built a proprietary evaluation dataset by curating speech samples from more than thirty African subregional accents across a variety of domains. Because this study was designed for practical, industrial applications, the dataset captures variation in volume, pitch, speaking rate, gender, and business contexts. To ensure unbiased results, it was kept completely isolated and never used during model training.

This dataset is part of Sanas’ broader proprietary data collection campaigns, which are conducted with full participant consent across multiple regions and represent a strategic investment in high-quality speech data.

For this study, Sanas used ASR word error rate (WER) as a tangible, real-world measure of intelligibility: the lower the WER, the more accurately an ASR system transcribed the speech. We used a state-of-the-art, open-source, 1.1-billion-parameter ASR model, parakeet-ctc-1.1b, which was jointly developed by NVIDIA and Suno.ai. Learn more about the AI model here.

Using an open-source model increases the transparency and reproducibility of this study, while demonstrating how Sanas can raise the performance of a leading ASR model.
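For readers less familiar with the metric, WER is the number of word-level substitutions, deletions, and insertions needed to turn the ASR transcript into the reference transcript, divided by the number of reference words. The sketch below is a minimal, self-contained illustration of that calculation, not the evaluation pipeline used in this study. The function names and example sentences are hypothetical, and a real evaluation would normalize text (punctuation, numerals, casing rules) and take its transcripts from the ASR model instead of hard-coded strings.

```python
def word_edit_distance(ref_words, hyp_words):
    """Levenshtein distance between two word lists (substitutions, deletions, insertions)."""
    # prev[j] holds the distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        curr = [i]  # i deletions turn i reference words into an empty hypothesis
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion: reference word missing
                curr[j - 1] + 1,                  # insertion: extra hypothesis word
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate for one utterance, using simple lowercase + whitespace tokenization."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return word_edit_distance(ref_words, hyp_words) / len(ref_words)


def corpus_wer(pairs):
    """WER over many (reference, hypothesis) pairs: total errors / total reference words."""
    total_errors = 0
    total_words = 0
    for reference, hypothesis in pairs:
        ref_words = reference.lower().split()
        hyp_words = hypothesis.lower().split()
        total_errors += word_edit_distance(ref_words, hyp_words)
        total_words += len(ref_words)
    if total_words == 0:
        raise ValueError("references must contain at least one word in total")
    return total_errors / total_words


if __name__ == "__main__":
    # Hypothetical transcripts, made up purely to exercise the functions.
    reference = "please confirm your account number"
    transcript_a = "please confirm you account numbers"
    transcript_b = "please confirm your account numbers"
    print(f"WER A: {wer(reference, transcript_a):.2f}")
    print(f"WER B: {wer(reference, transcript_b):.2f}")
```

Running the same ASR model on the same utterances with and without Accent Translation, and comparing the two corpus-level WER values, gives a before-and-after intelligibility measure of the kind described above.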

By focusing on diversity and quality over quantity and applying innovative modeling techniques to maximize the value of limited samples, we delivered significant clarity gains for African English speakers.

Listen to the audio samples below to hear African voices from across the continent with and without Sanas.

South Africa

Egypt

Nigeria

Cameroon

Kenya

Tanzania

Uganda

Note: The African source examples in this blog post are drawn from a mix of proprietary and open-source datasets, including Afrispeech-200 and Ugandan English Court Sentences, which contain medical jargon and legal terms, respectively. We chose these sentences to demonstrate our model’s capabilities on vocabulary from critical technical domains in addition to agent conversations. None of these samples were used to train our models.

UK: Creating a UK Accent Model That Works at Scale

The United Kingdom is home to a rich tapestry of accents. Between Scottish, Welsh, Irish, Estuary, and other Northern and Southern English accent variants, selecting a single "UK accent" is inherently challenging. This diversity means that no single output can reflect every regional variation. Yet, without a clear standard, speech AI risks producing accents that sound inconsistent or unnatural to local listeners.

To address this, we chose to target the modern form of Received Pronunciation (RP), which is widely understood across the UK and often used in media, education, and public life. By focusing on RP, we aimed to create an output accent that would be clear, familiar, and accessible to the broadest audience possible.

How We Built Our UK Accent Model

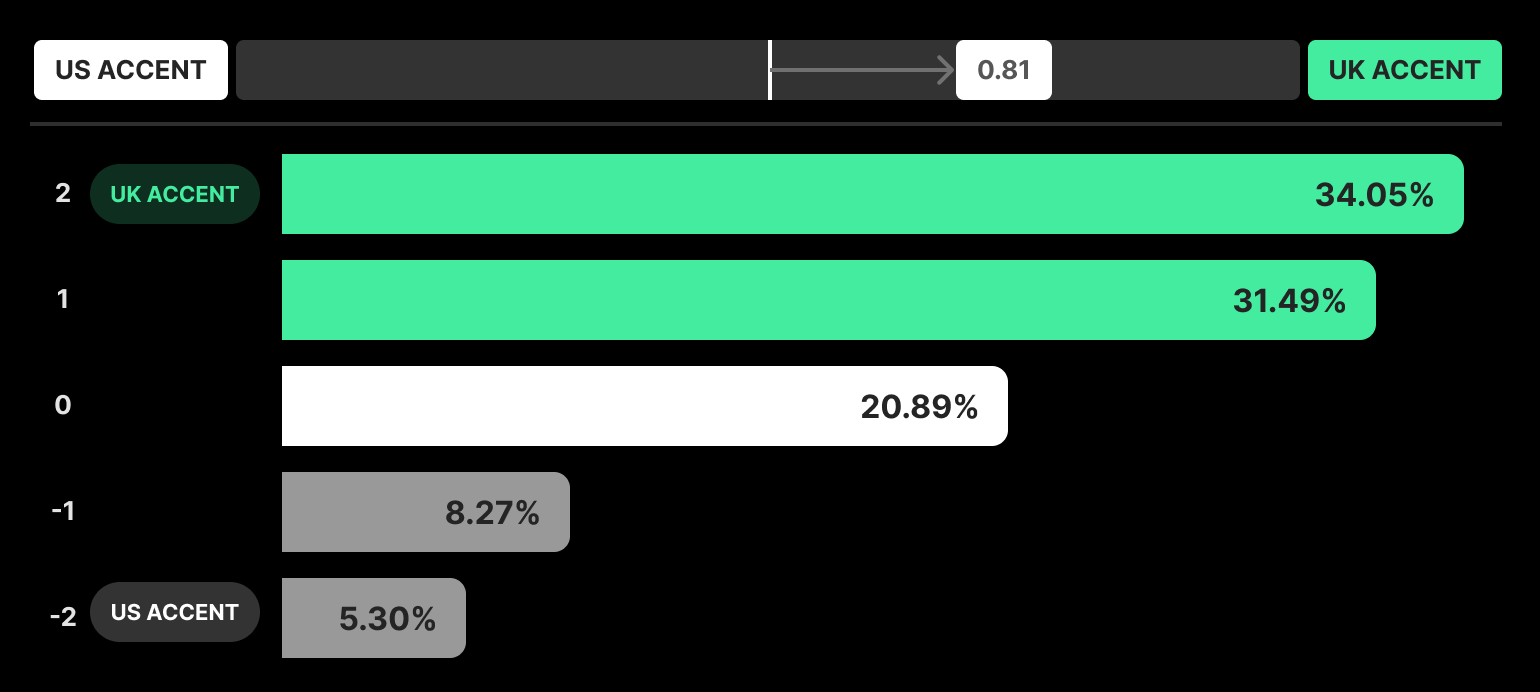

We validated our UK model’s accent accuracy through a large-scale subjective A/B evaluation against our existing US model, checking how listeners actually perceive the accent it produces.

- 168 audio files were prepared and balanced across accents (India, Philippines, Latin America, and Africa), gender, mother-tongue influence, and rate of speech.

- Evaluations were conducted by independent, blind listeners from the UK. This produced 1,680 total evaluations (an average of 10 per audio file), a sample large enough to support statistically reliable conclusions.

- UK listeners preferred the new RP output model over the existing US version by 51.8 percentage points, a clear signal that people respond more positively to hearing speech in their own local accent.
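One common way to summarize an A/B listening test like this is the preference margin: the share of evaluations favoring one output minus the share favoring the other, expressed in percentage points. The sketch below illustrates that calculation with a normal-approximation 95% confidence interval. It is an assumption about the general technique, not a description of the exact analysis behind the figure above. The function name and the vote counts are hypothetical placeholders, and the interval treats every evaluation as independent, which is optimistic when the same listeners rate many files.

```python
import math


def preference_margin(votes_a, votes_b, ties=0, z=1.96):
    """Return (margin, lower, upper) in percentage points for an A/B preference test.

    margin = share preferring A minus share preferring B, with ties counted
    in the denominator. The interval uses the normal approximation for the
    difference of two proportions drawn from the same multinomial sample.
    """
    n = votes_a + votes_b + ties
    if n == 0:
        raise ValueError("at least one evaluation is required")
    p_a = votes_a / n
    p_b = votes_b / n
    margin = p_a - p_b
    # Var(p_a - p_b) = [p_a + p_b - (p_a - p_b)^2] / n for multinomial counts.
    standard_error = math.sqrt((p_a + p_b - margin ** 2) / n)
    lower = margin - z * standard_error
    upper = margin + z * standard_error
    return margin * 100, lower * 100, upper * 100


if __name__ == "__main__":
    # The study's totals: 1,680 evaluations over 168 files.
    print("evaluations per file:", 1680 // 168)

    # Hypothetical vote counts, chosen only to exercise the function.
    margin, lower, upper = preference_margin(votes_a=70, votes_b=20, ties=10)
    print(f"margin: {margin:.1f} pts (95% CI {lower:.1f} to {upper:.1f})")
```

A more careful analysis would account for repeated ratings per listener and per file, for example by bootstrapping over listeners, but the margin itself is computed the same way.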

This rigorous evaluation gave us confidence that the RP output is both technically robust and aligned with listener expectations, making it a strong foundation for enterprises serving UK and European customers.

We’ve discussed the challenges and solutions behind expanding Accent Translation, but the clearest way to understand its impact is to hear it yourself. Below, you’ll find side-by-side audio comparisons: the original audio from African, Indian, Filipino, and Latin American speakers, translated into both US English and UK English outputs.

Africa (Male)

Africa (Female)

India (Male)

India (Female)

Philippines (Male)

Philippines (Female)

Latin America (Male)

Latin America (Female)

These side-by-side examples demonstrate the practical outcomes of our modeling and evaluation choices: higher intelligibility, preserved speaker identity, and flexible outputs for both US and UK markets.

Conclusion

Accent barriers are not only a matter of clarity; they are a matter of access. By expanding Accent Translation to cover a broader set of African accents, we enable enterprises to reach new talent pools and create jobs across a rapidly growing region. By introducing a UK output accent, we help companies connect with customers in speech that feels natural and local.

The research is complex, but the result is simple: clearer conversations, better customer experiences, and more open opportunities for skilled voices around the world. With each expansion, Sanas moves closer to fulfilling our mission: ensuring every person can be understood, no matter how they speak.