Meet Sanas Accent Translation AT4.0: Clearer, Faster, More Human

At Sanas, our mission is simple yet ambitious: make every voice heard, understood, and valued, regardless of accent.

Today, we’re thrilled to announce the launch of Sanas Accent Translation 4.0 (AT4.0): a generational leap forward in clear communication.

Global enterprises can now further reduce misunderstandings, expand accessibility, and improve both human-to-human and human-to-AI experiences, while keeping voices authentic and heard.

AT4.0 brings transformative improvements across three key dimensions:

- Intelligibility: significantly improves intelligibility and accuracy over its predecessors, and even improves the accuracy of state-of-the-art ASR (Automatic Speech Recognition) systems.

- Naturalness & Speaker Similarity: delivers our most natural-sounding accent translation yet, preserving the authenticity of each voice with unmatched fidelity.

- New Lightweight Mode: introduces a breakthrough lightweight mode, delivering high-quality accent translation on lower-end devices while preserving clarity and performance.

Elevating Intelligibility for Clearer Speech

TLDR: AT4.0 sets a new standard in speech clarity, reducing miscommunication and accelerating issue resolution. For enterprises, this means measurable cost savings, higher customer satisfaction, and a more consistent global CX.

For this study, Sanas used ASR WER (Word Error Rate) as a tangible, real-world proxy for intelligibility. We used a state-of-the-art open-source 1.1-billion-parameter ASR model, parakeet-ctc-1.1b, jointly developed by NVIDIA and Suno.ai.

We chose an open-source model to make this study more transparent and reproducible, and Sanas is proud to boost the performance of such a leading ASR model.
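Because the study relies on WER, here is a minimal sketch of how it is usually computed: the word-level edit distance (substitutions, deletions, and insertions) between the reference transcript and the ASR output, divided by the number of reference words. It assumes whitespace tokenization of transcripts that are already lowercased and stripped of punctuation; Sanas's exact text normalization is not described here, and `word_error_rate` is an illustrative name, not part of any Sanas or NVIDIA API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the first i-1 reference words
    # and the first j hypothesis words (one DP row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)  # 0 if the words match
            deletion = prev[j] + 1       # reference word missing from the output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# First example from the ASR Examples section
oracle = ("yeah we do have a quite a big database of jobs let me gather some "
          "basic information and we can narrow down that list okay")
original = ("yeah we do have quite a big database of jobs let me gather some "
            "basic information and we can addr it all in our list okay")
sanas_at40 = ("yeah we do have a quite a big database of jobs let me gather some "
              "basic information and we can narrow down the list okay")

print(f"Original WER:    {word_error_rate(oracle, original):.0%}")    # 24%
print(f"Sanas AT4.0 WER: {word_error_rate(oracle, sanas_at40):.0%}")  # 4%
```

Under this tokenization the sketch reproduces the 24% and 4% reported for the first example. Normalization choices, such as how "i m" or hyphenated words are split, change the reference word count and therefore the reported WER, so other transcripts may not match a given pipeline exactly.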

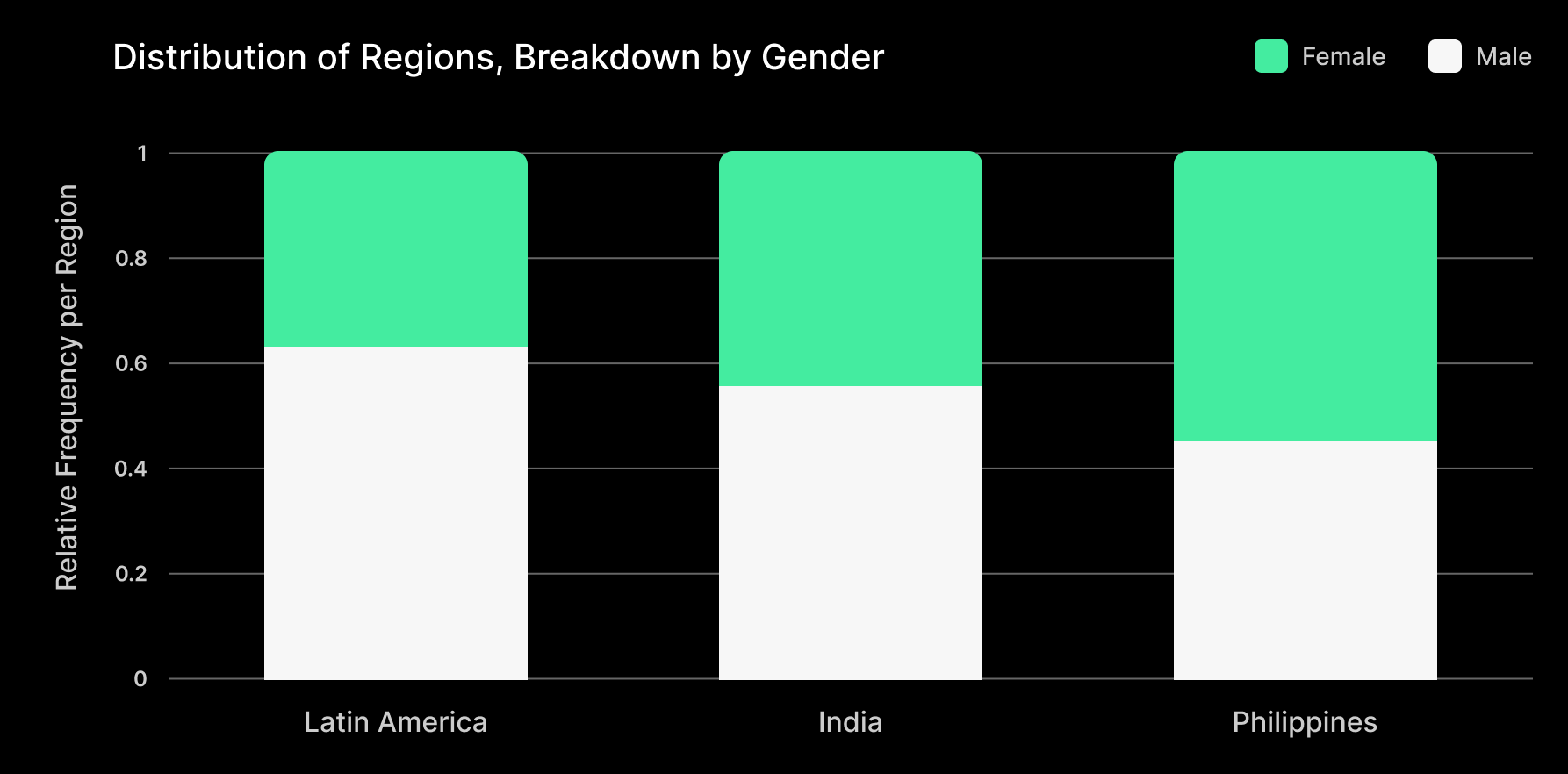

As this study is intended for practical, industrial applications, we conducted it on a proprietary dataset of real-world conversations from Indian, Filipino, and Latin American contact center agents, balanced across accent, volume, pitch, speaking rate, gender, line of business, and other factors. To avoid biased results, this dataset was kept completely isolated and was never used during model training. This evaluation dataset reflects Sanas’s commitment to building inclusive technologies that do not favor one demographic over another.

More details on this dataset can be found in Appendices 3 and 4 below.

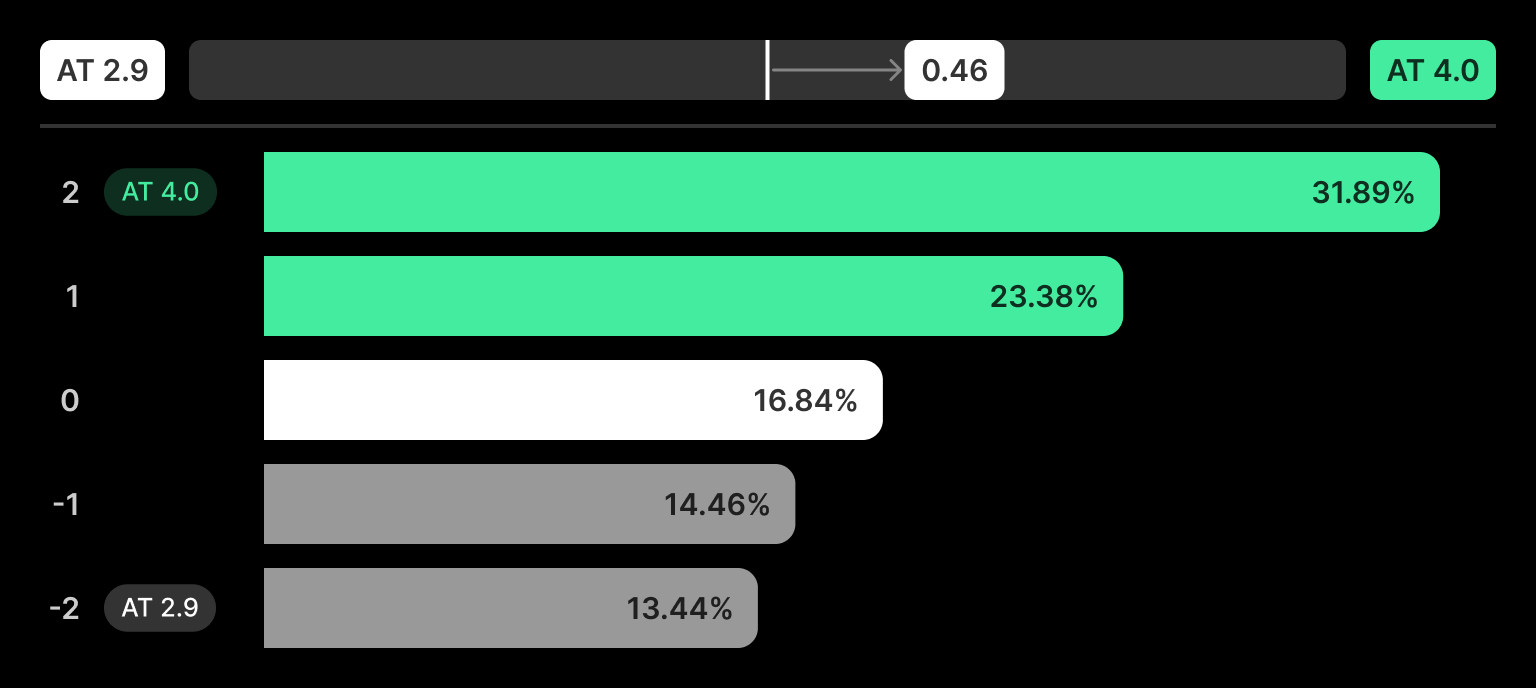

Compared with its predecessor (AT2.9), AT4.0 demonstrates the following relative improvements in WER:

- Overall: 6.4%

- India: 5.9%

- Philippines: 6.0%

- Latin America: 8.3%

These results mark a clear step forward in speech clarity, setting the stage for even greater advances in ASR performance.

AT4.0 is the First of its Kind to Improve ASR Performance

Sanas has long been known for improving human-to-human communication. We are particularly excited to announce that AT4.0 now extends our speech enhancement capabilities to speech-to-text, especially through the Sanas SDK (sign up to learn more).

One of the most exciting breakthroughs in this release is the first-ever comprehensive study showing that Sanas Accent Translation significantly improves the performance of Automatic Speech Recognition (ASR), i.e. speech-to-text.

TLDR: Sanas AT4.0 is the first accent translation system proven to improve ASR accuracy for non-native English speakers, reducing word error rates by an average of 27% for Indian-accented speakers. This breakthrough makes speech technology more inclusive and reliable, unlocking more accessibility and stronger AI performance across industries.

Historically, ASR systems have shown bias: speakers with non-native or regional accents experience significantly higher error rates compared to native English speakers. This results in misunderstandings, misclassifications, and frustration across industries that depend on ASR — from customer experience to AI agents, transcription, healthcare, and beyond. By translating accents, Sanas greatly narrows the ASR’s beam search (i.e. possible transcript combinations) and reduces parallel runs for disfluency detection and time-marking, thereby improving confidence and accuracy.

With our new AT4.0 model, we conducted rigorous tests using a state-of-the-art ASR engine and a comprehensive dataset. The results are clear:

- AT4.0 consistently lowers WER across Indian, Filipino, and Latin American accents.

- 7.7% overall average relative WER improvement compared with speech without Sanas.

- 27.0% average relative WER improvement for Indian-accented English compared with speech without Sanas.

- Non-native speakers see the largest relative improvements, reducing inequities in ASR access.

- The improvements are statistically significant, establishing Accent Translation not just as a usability tool but as a validated performance enhancer.
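A relative WER improvement is the fractional reduction from the baseline, not a difference in percentage points. As a worked check, the overall average WERs reported in the Lightweight Mode section (15.6% without Sanas, 14.4% with AT4.0) give the 7.7% relative figure quoted above, even though the absolute drop is only 1.2 points. Those inputs are rounded to one decimal, so recomputed values can differ slightly in general; the function name is illustrative.

```python
def relative_wer_improvement(baseline_wer: float, new_wer: float) -> float:
    """Fractional reduction of WER relative to the baseline (positive means better)."""
    if baseline_wer <= 0:
        raise ValueError("baseline WER must be positive")
    return (baseline_wer - new_wer) / baseline_wer


# Overall average WERs (percent) reported in the Lightweight Mode section
original_wer = 15.6  # without Sanas Accent Translation
at40_wer = 14.4      # with AT4.0

absolute_gain = original_wer - at40_wer
relative_gain = relative_wer_improvement(original_wer, at40_wer)
print(f"absolute: {absolute_gain:.1f} points, relative: {relative_gain:.1%}")
# absolute: 1.2 points, relative: 7.7%
```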

The implications are significant. This is a milestone in Sanas’s mission to improve communication whenever a human is in the loop, making the speech ecosystem more inclusive and improving comprehension in both human-to-human and human-to-computer interactions.

Interested in learning how AT4.0 can be used in your company's ASR technology? Complete the form for a tailored product walkthrough.

ASR Examples

Ready to see AT4.0 in action? Each of the samples below includes:

- Oracle Transcript or “Ground-Truth Transcript” is the human-verified transcript of the speech, i.e. what was actually said. It serves as the “oracle” or reference answer.

- Original Transcript is the text produced by the ASR system when given the original audio.

- Sanas AT4.0 Transcript is the text produced by the ASR system when given the audio synthesized by Sanas AT4.0.

Transcription errors in the original transcripts are bolded, and corresponding text is bolded in the Sanas AT4.0 transcripts for comparison. All errors are presented verbatim from the ASR model's prediction.

| Oracle Transcript | Original Transcript | Sanas AT4.0 Transcript |
|---|---|---|
| “yeah we do have a quite a big database of jobs let me gather some basic information and we can narrow down that list okay” | “yeah we do have quite a big database of jobs let me gather some basic information and we can addr it all in our list okay” | “yeah we do have a quite a big database of jobs let me gather some basic information and we can narrow down the list okay” |
| | 24% WER | 4% WER |

| Oracle Transcript | Original Transcript | Sanas AT4.0 Transcript |
|---|---|---|
| “what jobs are your list we do have a quite a big database of jobs let me gather some basic information” | “what dogs are we on list we do have a quite a big database of dogs let me gather some basic information” | “what jobs are your list we do have a quite a big database of jobs let me gather some basic information” |
| | 19% WER | 0% WER |

Naturalness & Speaker Similarity

Too often, speech systems sound stiff or robotic. They may get the words right, but they lose the feel of human conversation. AT4.0 now delivers speech with improved intonation, fluid pacing, and emotionally resonant prosody.

TLDR: Your voice is more than sound; it’s part of your identity. It carries your personality, culture, and sense of self. AT4.0 makes conversations sound more natural and preserves speaker similarity while enhancing clarity, fostering genuine connection, not just communication.

We validated these improvements through a large-scale subjective A/B evaluation comparing AT2.9 and AT4.0 to verify advances in naturalness and speaker similarity.

- 168 audio files were prepared and balanced across accents (India, Philippines, and Latin America), gender, mother-tongue influence, and rate of speech.

- Evaluations were conducted by independent, blind American listeners. This produced 3,528 total evaluations, ensuring statistical reliability.

- AT4.0 was selected 27.4 percentage points more often than AT2.9.
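The 27.4-point figure is the difference between the two systems' selection rates. The raw split is not reported, so the counts below are a hypothetical reconstruction that assumes no ties: 2,247 vs 1,281 is one integer split of 3,528 judgments that yields a 27.4-point margin. The sketch then applies a normal-approximation sign test; that test is our illustrative choice, not necessarily the analysis Sanas ran. Note that 3,528 / 168 works out to 21 judgments per audio file, so judgments are not independent; treating them as independent overstates significance, and a per-file or mixed-effects analysis would be more conservative.

```python
import math


def preference_margin(wins_a: int, wins_b: int, ties: int = 0) -> float:
    """Difference in selection rates between A and B, as a fraction of all judgments."""
    return (wins_a - wins_b) / (wins_a + wins_b + ties)


def sign_test_z(wins_a: int, wins_b: int) -> float:
    """Normal approximation to the sign test on non-tied judgments (H0: 50/50 preference)."""
    n = wins_a + wins_b
    return (wins_a - n / 2) / math.sqrt(n / 4)


def two_sided_p_value(z: float) -> float:
    """Two-sided tail probability of a standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical split: 3,528 judgments, no ties, 27.4-point margin for AT4.0
wins_at40, wins_at29 = 2247, 1281

margin = preference_margin(wins_at40, wins_at29)
z = sign_test_z(wins_at40, wins_at29)
print(f"margin: {margin:.1%}, z = {z:.1f}, p = {two_sided_p_value(z):.1e}")
```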

By grounding our work in data and human evaluation, we ensure our improvements are not just technical, but also meaningful in real-world conversations.

Hear the difference in the examples below, and see Appendix 2 for additional examples.

Examples

| Category | Original | AT2.9 | AT4.0 |
|---|---|---|---|
| Intelligibility, Speaker Similarity | | | |
| Naturalness, Noise Robustness, Speaker Similarity | | | |
| Intelligibility, Naturalness, Speaker Similarity | | | |

New Lightweight Mode

At Sanas, our mission has always been to democratize speech technology, ensuring that accent translation is not only powerful, but also accessible to everyone.

The reality is, not everyone has access to the latest devices or hardware. In fact, many of the people who stand to benefit most from Accent Translation often rely on entry-level computers or thin clients.

Accent Translation Lightweight Mode changes that.

TLDR: Lightweight Mode reduces memory and CPU usage, enabling deployment on older laptops and thin-client setups. Sanas’s Accent Translation solution is the most efficient in the market, expanding deployment flexibility, lowering infrastructure costs, and unlocking access for a wider global workforce.

AT4.0 Lightweight is an optimized variant of AT4.0 that:

- Runs with 35% fewer parameters while maintaining high intelligibility, speaker similarity, and naturalness:

  - Original (without Sanas Accent Translation): overall average WER 15.6%

  - AT4.0: overall average WER 14.4% (better than Original)

  - AT4.0 Lightweight: overall average WER 14.4% (no loss of intelligibility relative to AT4.0)

- Uses less memory and lower CPU load, making it possible to deploy on older laptops and thin-client setups.

- Leverages an efficient multithreading setup to remain stable and performant even under stressful compute conditions.

- Outputs 8 kHz audio suitable for telephony applications.

The table below shows CPU usage benchmarks on various CPUs (lower is better).

| | Intel i3-6100 | Intel i5-10210U | Intel i7-1355U | AMD Ryzen 5 5500U | AMD Ryzen 3 7320U |
|---|---|---|---|---|---|
| AT2.9 CPU Usage | 9.0-11.4% | 7.0-8.5% | 2.8-3.9% | 3.2-4.3% | 8.0-9.5% |
| AT4.0 CPU Usage | 6.9-8.8% | 5.4-6.8% | 2.3-4.3% | 3.2-4.8% | 4.2-6.2% |
| AT4.0 Lightweight CPU Usage | 5.6-8.6% | 4.8-5.7% | 1.7-3.6% | 2.6-4.3% | 2.7-3.7% |
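To summarize each reported range as a single number, the sketch below takes the midpoint of each range and computes the relative reduction of AT4.0 Lightweight versus AT2.9. Using midpoints is our assumption; the ranges' measurement method and the distribution of usage within them are not described, and the helper names are illustrative.

```python
# CPU usage ranges (percent) copied from the table above, as (low, high)
CPU_USAGE = {
    "Intel i3-6100":     {"AT2.9": (9.0, 11.4), "AT4.0": (6.9, 8.8), "AT4.0 Lightweight": (5.6, 8.6)},
    "Intel i5-10210U":   {"AT2.9": (7.0, 8.5),  "AT4.0": (5.4, 6.8), "AT4.0 Lightweight": (4.8, 5.7)},
    "Intel i7-1355U":    {"AT2.9": (2.8, 3.9),  "AT4.0": (2.3, 4.3), "AT4.0 Lightweight": (1.7, 3.6)},
    "AMD Ryzen 5 5500U": {"AT2.9": (3.2, 4.3),  "AT4.0": (3.2, 4.8), "AT4.0 Lightweight": (2.6, 4.3)},
    "AMD Ryzen 3 7320U": {"AT2.9": (8.0, 9.5),  "AT4.0": (4.2, 6.2), "AT4.0 Lightweight": (2.7, 3.7)},
}


def midpoint(usage_range: tuple[float, float]) -> float:
    """Crude single-number summary of a (low, high) usage range."""
    low, high = usage_range
    return (low + high) / 2


def relative_cpu_reduction(baseline: tuple[float, float],
                           candidate: tuple[float, float]) -> float:
    """Relative drop in midpoint CPU usage (positive means the candidate uses less CPU)."""
    base, cand = midpoint(baseline), midpoint(candidate)
    return (base - cand) / base


for cpu, usage in CPU_USAGE.items():
    reduction = relative_cpu_reduction(usage["AT2.9"], usage["AT4.0 Lightweight"])
    print(f"{cpu:<18} Lightweight vs AT2.9: {reduction:.0%} lower")
```

By this midpoint measure, AT4.0 Lightweight uses roughly 8% (Ryzen 5 5500U) to 63% (Ryzen 3 7320U) less CPU than AT2.9 on the tested machines.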

Breaking Barriers in Speech Understanding for People and Platforms

AT4.0 represents a turning point. This isn’t just about better performance; it’s about redefining what speech technology can deliver.

- Resilience: Technology that works in the real world and delivers clear, measurable impact.

- Authenticity: Voices that remain recognizably yours.

- Scalability: Lightweight options that meet users where they are, even on older computers and thin-client setups.

For our customers and partners, AT4.0 means faster ROI, broader accessibility, and future-proof technology. For agentic voice AI systems, it means higher accuracy, improved robustness, and a larger, previously underserved audience. For end users around the world, it means one thing: conversations that truly bridge gaps in speech understanding.

Sanas AT4.0 is a major leap forward, but it’s only the beginning of what’s to come. We are building toward a future where communication is seamless, inclusive, and uniquely human, no matter where you’re from or how you speak.

Appendix 1 - ASR Additional Examples

| Oracle Transcript | Original Transcript | Sanas AT4.0 Transcript |
|---|---|---|
| “just let me double check the note could you please provide me the verbal passcode” | “just let me the about check in could you please provide me the berap passcode” | “just let me double check that out could you please provide me the verbal passcode” |
| | 35.7% WER | 14.3% WER |

| Oracle Transcript | Original Transcript | Sanas AT4.0 Transcript |
|---|---|---|
| “we are just following up with you because our record show that you may qualify for new low cost or possibly even free health insurance programs” | “we are just following a p d because our records show that it may qualify for new low cost or possibly even free health insurance programmes” | “we are just following up with you because our records show that you may qualify for new low cost or possibly even free health insurance programs” |
| | 28.6% WER | 4.8% WER |

| Oracle Transcript | Original Transcript | Sanas AT4.0 Transcript |
|---|---|---|
| “yeah hi this is rachel i m calling on a recorded line from your savings advisor how are you doing today” | “ya hi this is rachel i m calling on recorded line from your savings advisor harding today” | “yeah hi this is rachel i m calling on recorded line from your savings advisor how you doing today” |
| | 22.7% WER | 9.1% WER |

Appendix 2 - Intelligibility Additional Examples

| Category | Original | AT2.9 | AT4.0 |
|---|---|---|---|
| Audio Quality, Intelligibility, Speaker Similarity | | | |
| Audio Quality, Intelligibility, Naturalness | | | |
| Naturalness, Speaker Similarity | | | |

Appendix 3 - Design of the Evaluation Dataset

Because Sanas Accent Translation is expected to perform at the highest level in diverse and challenging real-world conditions, our metrics and evaluations must reflect that complexity. Existing industry standards are not sufficient — as the trailblazer in Accent Translation, Sanas sets the benchmark and continues to push the frontier forward. That’s why we dedicate significant effort to curate, expand, and update our evaluation datasets.

To meet our quality standards, all evaluation data is transcribed manually and reviewed by audio experts for suitability. This pool of high-quality data then undergoes a selection process designed to reflect real-world complexity while avoiding biases that create blind spots. This is not trivial: removing all biases risks misrepresenting the real world, while accepting the data distribution as-is risks reinforcing existing biases.

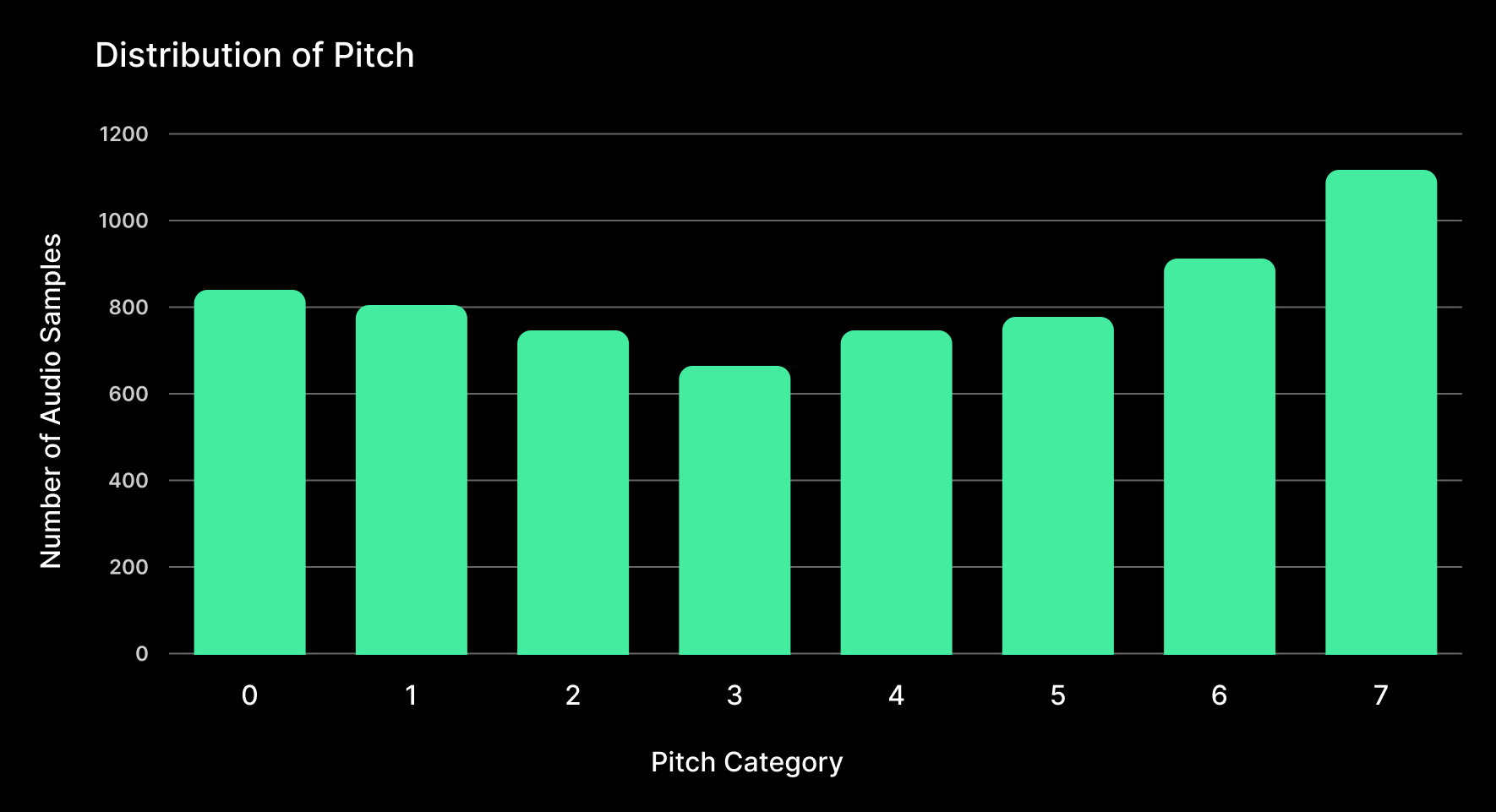

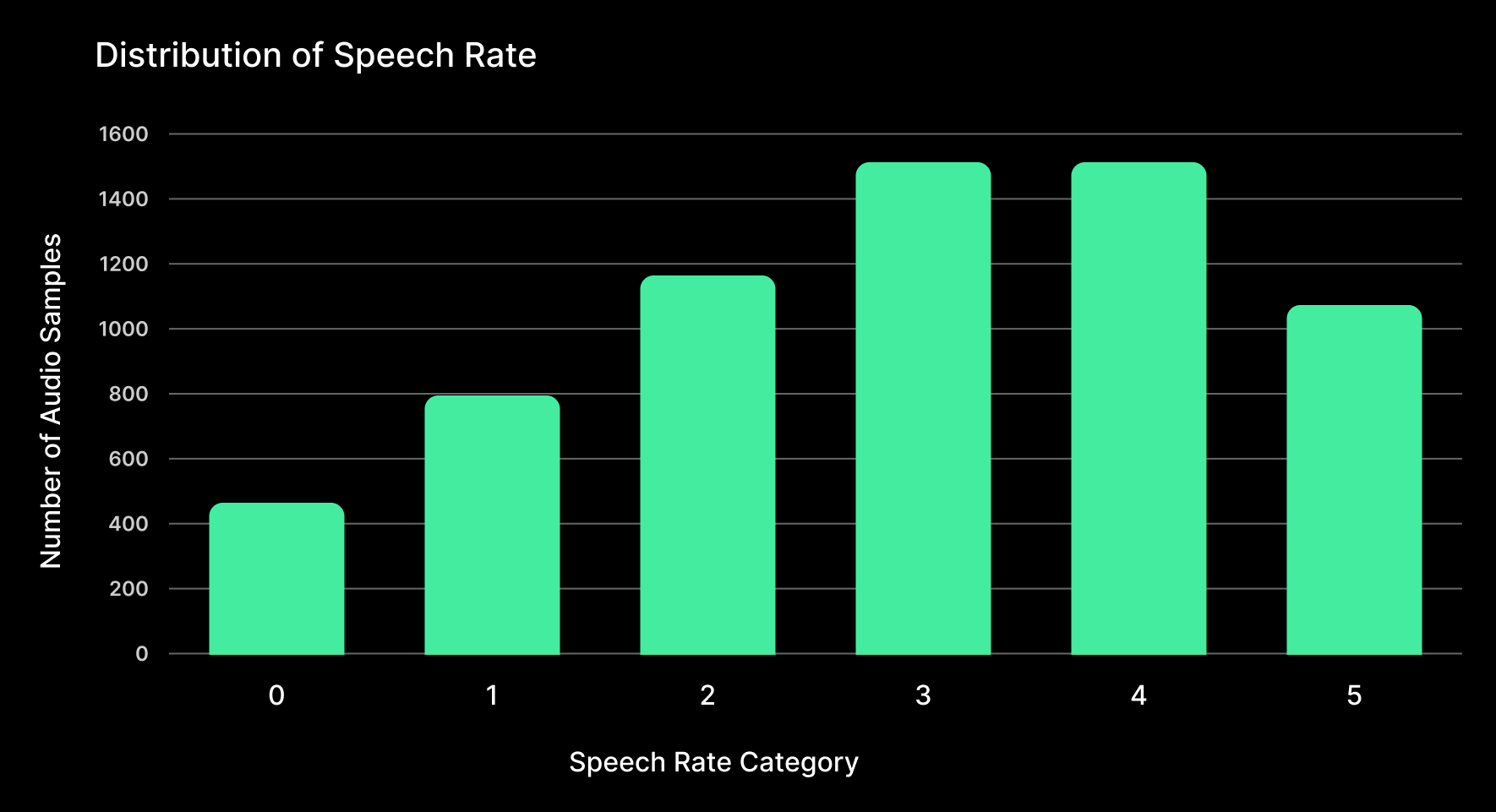

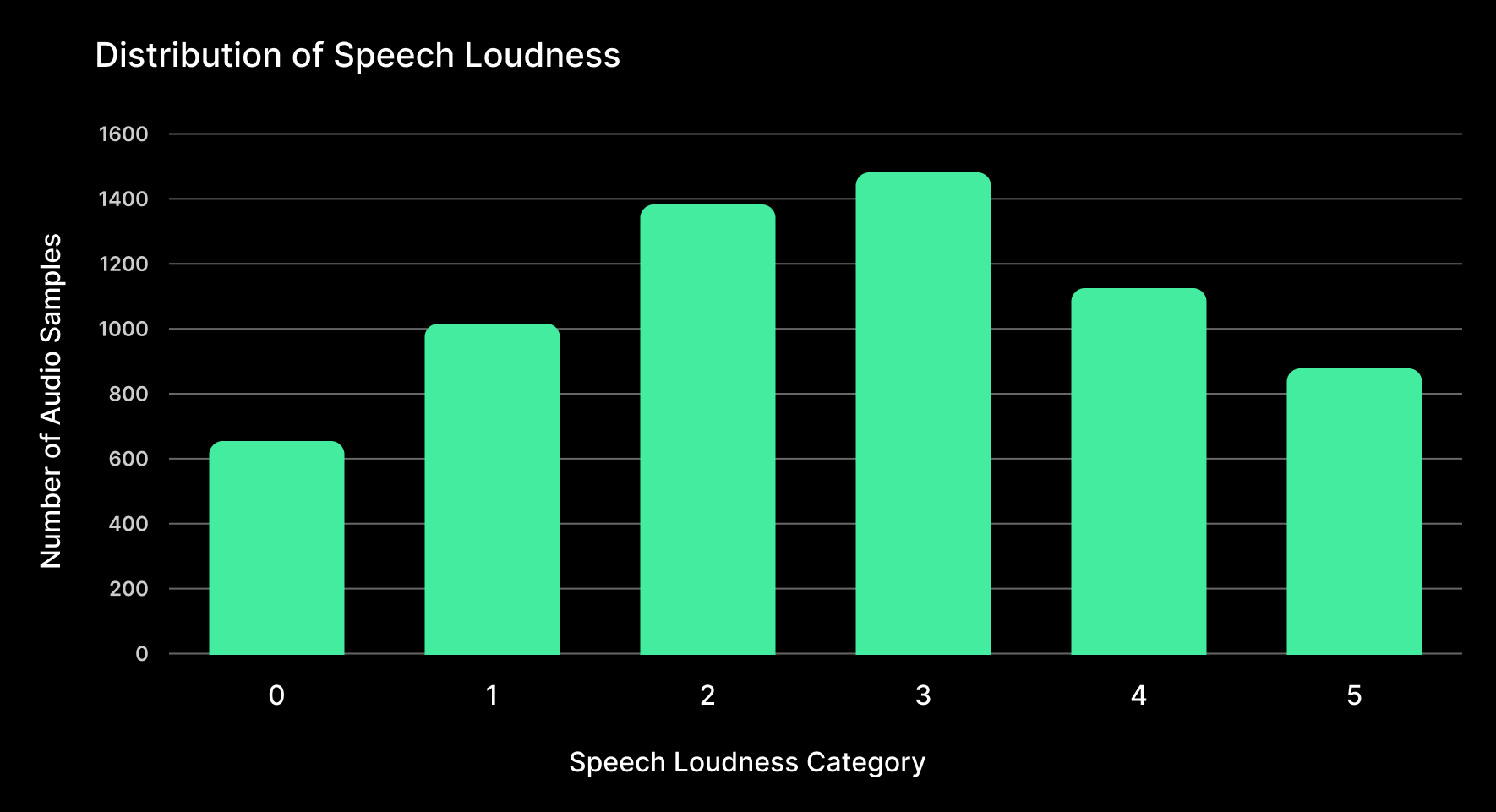

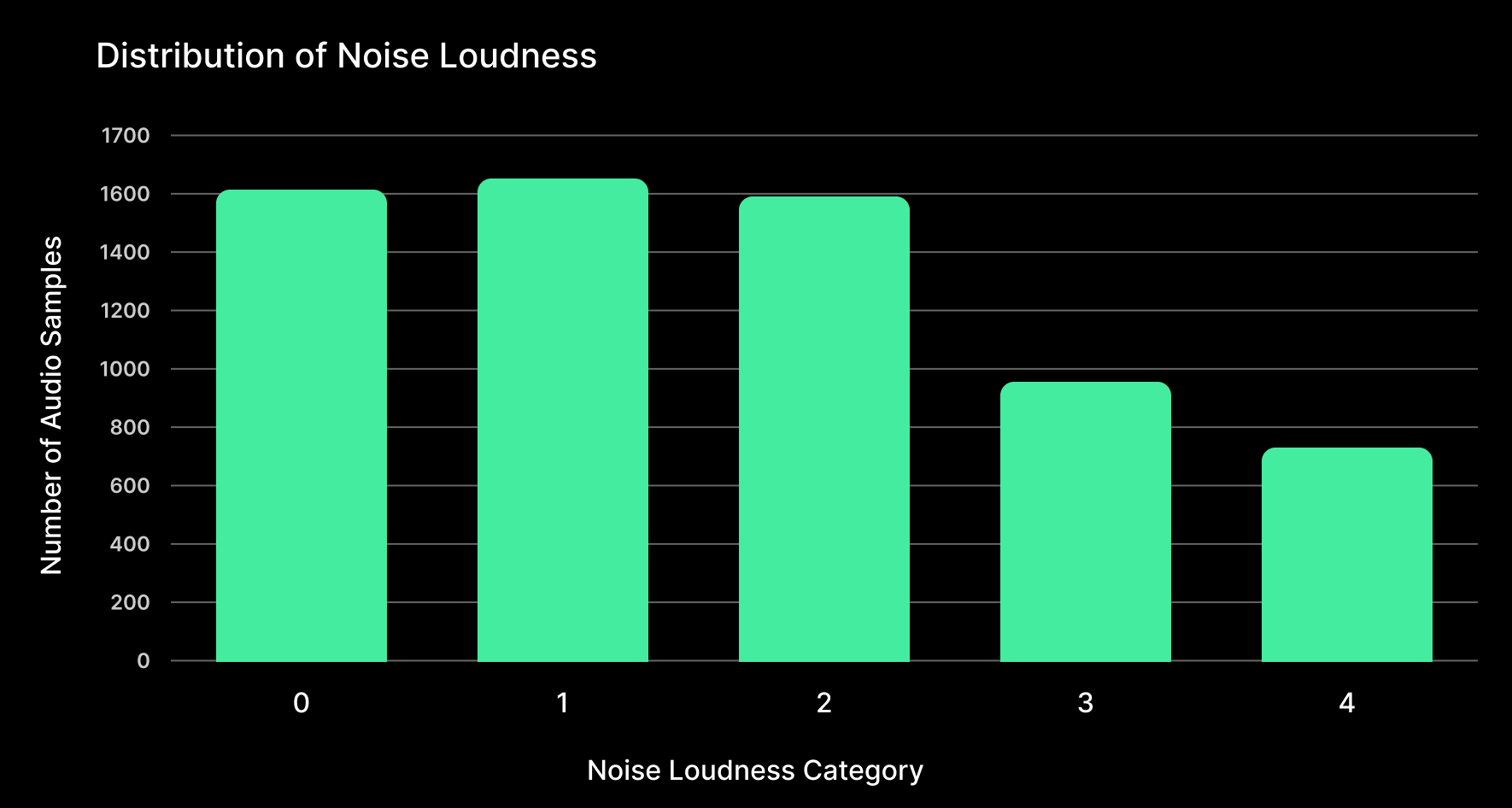

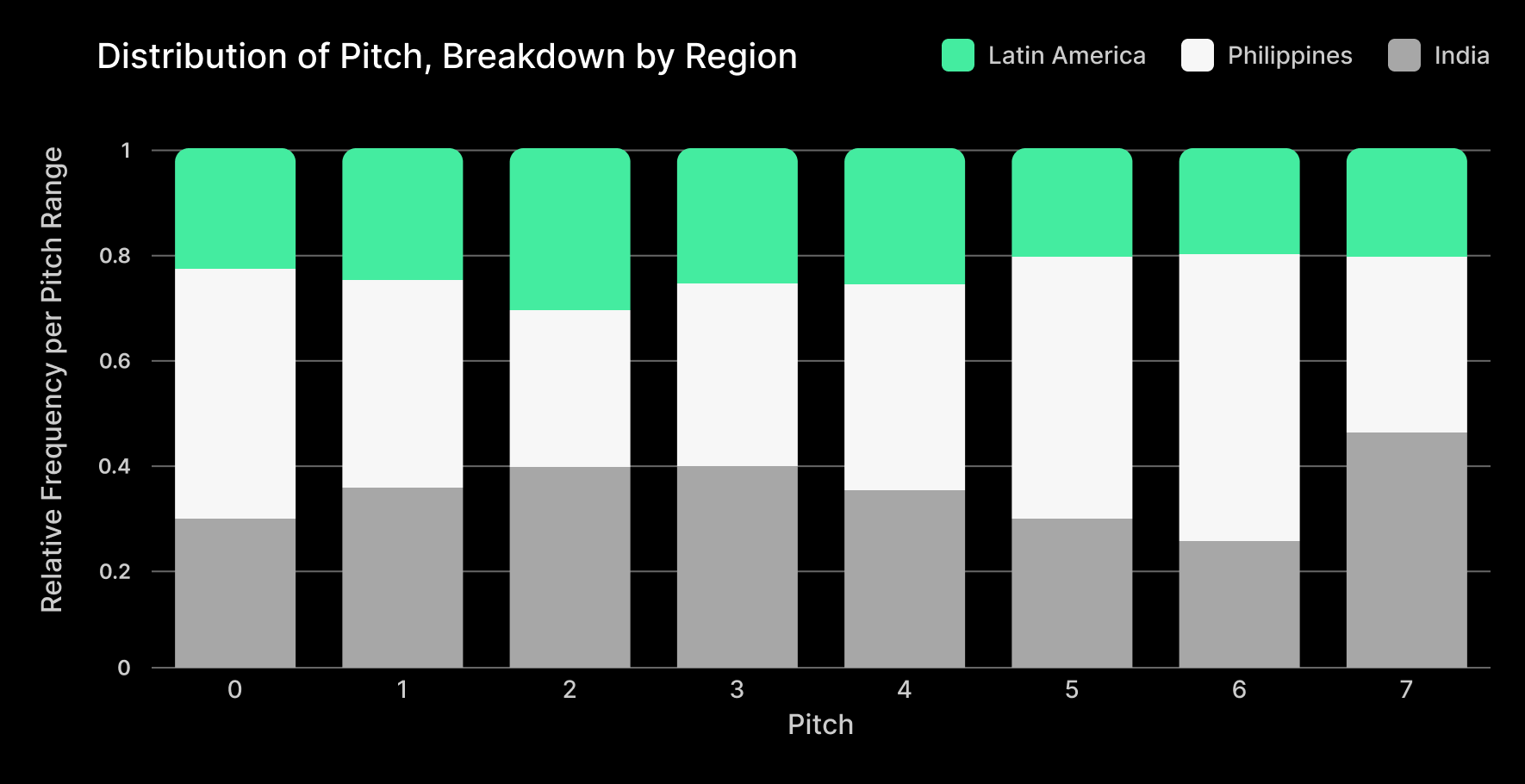

When creating and balancing our evaluation datasets, we account for multiple factors such as gender, geography, pitch range, speech loudness, noise level, speaking rate, and more. For each factor, we have defined target distributions based on statistical principles and boundaries set by domain experts.

Each audio sample in the dataset has a value for each of the above factors — for example, a Filipino speaker with a deep voice, speaking quietly at a moderate pace. To address biases in the dataset, Sanas has developed in-house algorithms that assemble the final dataset from our pool of quality-assured samples using iterative sampling techniques to achieve the closest possible match to the desired distributions.
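Sanas's in-house algorithms are not published, so the following is only a generic illustration of iterative sampling toward target distributions: a greedy selector that, at each step, adds the pool sample that minimizes the summed L1 distance between the subset's per-factor category shares and the targets. The factor names, categories, target shares, and function names below are all hypothetical.

```python
from collections import Counter

Sample = dict[str, str]  # factor name -> category, e.g. {"accent": "PH", "pitch": "low"}


def distribution_error(counts: dict[str, Counter], n: int,
                       targets: dict[str, dict[str, float]]) -> float:
    """Sum over factors of the L1 distance between achieved and target shares."""
    error = 0.0
    for factor, target in targets.items():
        for category in sorted(set(target) | set(counts[factor])):
            error += abs(counts[factor][category] / n - target.get(category, 0.0))
    return error


def greedy_balanced_subset(pool: list[Sample], targets: dict[str, dict[str, float]],
                           size: int) -> list[int]:
    """Pick `size` sample indices one at a time, each time adding the sample that
    brings the subset's per-factor shares closest to the target distributions."""
    if size > len(pool):
        raise ValueError("cannot select more samples than the pool contains")
    counts = {factor: Counter() for factor in targets}
    remaining = list(range(len(pool)))
    chosen: list[int] = []
    for n in range(1, size + 1):
        best_idx, best_error = remaining[0], float("inf")
        for idx in remaining:
            for factor in targets:  # tentatively add this sample
                counts[factor][pool[idx][factor]] += 1
            error = distribution_error(counts, n, targets)
            for factor in targets:  # undo the tentative add
                counts[factor][pool[idx][factor]] -= 1
            if error < best_error:
                best_idx, best_error = idx, error
        chosen.append(best_idx)
        remaining.remove(best_idx)
        for factor in targets:
            counts[factor][pool[best_idx][factor]] += 1
    return chosen


# Hypothetical pool skewed toward one accent
pool = [
    {"accent": "IN", "gender": "F"},
    {"accent": "IN", "gender": "M"},
    {"accent": "IN", "gender": "F"},
    {"accent": "PH", "gender": "M"},
    {"accent": "LATAM", "gender": "F"},
    {"accent": "IN", "gender": "M"},
]
targets = {"accent": {"IN": 1 / 3, "PH": 1 / 3, "LATAM": 1 / 3},
           "gender": {"F": 0.5, "M": 0.5}}
picked = greedy_balanced_subset(pool, targets, size=3)
print([pool[i]["accent"] for i in picked])
```

Greedy selection is simple and deterministic but not guaranteed to find the best possible subset; randomized restarts or swap-based refinement are common additions when exact matches matter.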

The continued care, rigor, and technical sophistication of this process give Sanas the confidence to distinguish strong from poor performance, identify strengths and areas for improvement, and ensure Sanas Accent Translation continues to set new industry standards.

Appendix 4 - Evaluation Dataset Distributions by Factor

For balancing and interpreting results, we categorize our evaluation data by factor. A “naive approach” in categorization would be to divide each value range into equal parts, in other words, to segment them linearly. However, what appears reasonable numerically does not always translate into what is relevant to human perception. Human hearing mechanisms are non-linear in all aspects, as is well-documented by the field of psychoacoustics. For example, doubling the power of a sound does not make it sound twice as loud to us — it will sound less than twice as loud, depending on the frequency. Similarly complex relationships exist for the perception of pitch, noise loudness, and speech intelligibility at different speech rates.

We take these psychoacoustic factors into account when categorizing our evaluation data to ensure that each category represents a meaningful difference in speech perception and a distinctly different condition for our models to perform under.
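To make the loudness example concrete: doubling acoustic power is a change of about +3 dB, and under the common sone-scale rule of thumb (roughly +10 dB per doubling of perceived loudness, strictly defined for a 1 kHz tone above about 40 phon) that sounds only about 1.23 times as loud. The sketch below computes this and shows one way to bin levels on a decibel scale instead of a linear power scale. The bin edges and function names are hypothetical; Sanas's category boundaries are not published here.

```python
import bisect
import math


def power_ratio_to_db(power_ratio: float) -> float:
    """Level change in decibels for a given ratio of acoustic power."""
    return 10 * math.log10(power_ratio)


def approx_loudness_ratio(power_ratio: float) -> float:
    """Sone-scale rule of thumb: every +10 dB roughly doubles perceived loudness
    (defined for a 1 kHz tone at moderate levels; an approximation elsewhere)."""
    return 2 ** (power_ratio_to_db(power_ratio) / 10)


def level_category(level_db: float, edges_db: list[float]) -> int:
    """Index of the category a level falls into, given sorted category edges in dB."""
    return bisect.bisect_right(edges_db, level_db)


print(f"{approx_loudness_ratio(2.0):.2f}x as loud")  # 1.23x as loud, not 2x

# Hypothetical noise-level edges, evenly spaced in dB rather than in linear power
NOISE_EDGES_DB = [-60.0, -50.0, -40.0, -30.0]
print(level_category(-45.0, NOISE_EDGES_DB))  # 2
```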

After defining categories, the next key question is the balance. Here we aim to balance generally representative use cases alongside harsher conditions.

For example, very noisy conditions (Noise Loudness Categories 3-4) are rare in practice, but mission-critical. We intentionally over-represent these conditions by at least 10x in our evaluation set to provide enough data for statistical significance.
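As an illustration of what over-representing rare conditions by 10x can mean when setting targets, the sketch below multiplies the real-world share of the boosted categories by a chosen factor and shrinks the remaining categories proportionally so the shares still sum to 1. The real-world shares, the assumption of exactly four noise categories numbered 1-4, and the function name are hypothetical; actual prevalence figures are not given here.

```python
def oversample_targets(real_world: dict[int, float], boosted: set[int],
                       factor: float = 10.0) -> dict[int, float]:
    """Multiply the real-world shares of `boosted` categories by `factor` and shrink
    the other categories proportionally so the evaluation shares still sum to 1."""
    if factor < 1.0:
        raise ValueError("factor must be >= 1 to over-represent categories")
    boosted_total = factor * sum(real_world[c] for c in boosted)
    if boosted_total >= 1.0:
        raise ValueError("boosted categories would fill the whole evaluation set")
    rest_total = sum(share for c, share in real_world.items() if c not in boosted)
    scale = (1.0 - boosted_total) / rest_total
    return {c: share * (factor if c in boosted else scale)
            for c, share in real_world.items()}


# Hypothetical real-world shares for noise-loudness categories 1-4
real_world = {1: 0.60, 2: 0.36, 3: 0.03, 4: 0.01}
targets = oversample_targets(real_world, boosted={3, 4}, factor=10.0)
for category, share in targets.items():
    print(f"category {category}: real world {real_world[category]:.0%}, eval set {share:.1%}")
```

In this hypothetical, categories 3 and 4 grow from 4% of real-world audio to 40% of the evaluation set, which is one reason per-category results are a natural way to read performance under harsh conditions.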