Reclaiming the Full Spectrum of Human Speech: How We Built Real-Time Audio Upscaling from Low (8kHz) to High Fidelity (16kHz)

At Sanas, we care deeply about clarity and about preserving the full richness of the human voice, not just technically but emotionally. When we started thinking about real-time voice transformation, we realized that the limitations of low-fidelity audio weren’t just a quality issue; they were a barrier to understanding.

Low-fidelity, 8kHz audio became the industry standard in the 1970s because it was efficient. It enabled large-scale voice communication, but the tradeoff was substantial. With 8kHz sampling, only frequencies up to 4kHz can be captured. Everything above that is lost, including consonant bursts, upper harmonics, and vocal richness. At 8kHz, speech remains intelligible, but sounds flat, compressed, and more difficult to follow, especially in noisy or fast-paced conversations.

We set out to solve this challenge with Sanas Speech Enhancement. Our approach was not to replace existing infrastructure, but to build a system that can reconstruct high-frequency detail from low-fidelity, 8kHz input in real time. The output is full, 16kHz-quality speech, with no change to the codecs or the transmission layer. Even better, Sanas Speech Enhancement runs entirely on the receiving device. You keep the efficiency of low-fidelity transmission and hear the richness of high-fidelity speech.

In this article, we’ll explore how decades of legacy constraints shaped the sound of modern communication, and how we set out to reverse that by reclaiming the full human experience in every voice.

A Legacy Measured in Kilobits

The global standard for voice communication was set in the 1960s, when telephony infrastructure was designed around the limitations of analog switching and copper lines. By the early 1970s, sampling speech at 8kHz and encoding it at 64 kbps (8,000 samples per second at 8 bits each, using the G.711 PCM standard) became the default, enough to carry the basic structure of the human voice within the bandwidth limits of the time.

Half a century later, much of that infrastructure still remains. From mobile networks to enterprise Voice over Internet Protocol (VoIP) systems, countless environments are still optimized for narrowband 8kHz transmission. Upgrading physical infrastructure, revising codec standards, and expanding bandwidth at scale is costly, slow, and geographically fragmented. The result is that most voice systems still operate under the constraints of a format designed for rotary phones. This legacy isn’t just technical debt, it’s a global bottleneck. And it’s one of the main reasons low-fidelity 8kHz speech remains so widespread.

How We See Sound

When we capture audio digitally, we’re sampling it: taking a rapid sequence of measurements of how the air pressure changes over time. A microphone converts the continuous movement of sound waves into an electrical signal, and an analog-to-digital converter turns that signal into a series of numbers. If the sampling rate is 8,000 samples per second (8kHz), then we’re storing 8,000 values for every second of sound.
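To make the idea of sampling concrete, here is a minimal sketch that measures a pure tone at a fixed rate. The 440 Hz test tone and the helper name `sample_tone` are assumptions chosen for illustration; they are not part of Sanas Speech Enhancement.

```python
import math

def sample_tone(freq_hz, sample_rate_hz, duration_s):
    """Return samples of a unit-amplitude sine wave taken at a fixed rate."""
    n_samples = int(round(sample_rate_hz * duration_s))
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

# One second of a 440 Hz tone at the 8kHz narrowband rate.
samples = sample_tone(freq_hz=440.0, sample_rate_hz=8000, duration_s=1.0)
print(len(samples))  # one stored value per sampling instant
```

Doubling the sample rate to 16kHz would double the number of stored values for the same second of sound.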

These samples make up what’s called the time domain signal. If you were to graph it, it’d look like a familiar waveform, oscillations that rise and fall, like a sine wave. But while this view tells us how the signal changes over time, it doesn’t tell us much about what the sound actually contains.

Human voices, like all sounds, are made of a mix of frequencies. A single vowel might carry a fundamental tone along with a complex stack of harmonics above it, sometimes extending up to 10kHz. In the time domain, those components are all tangled together. To work with them, we need a different perspective. This is where the Fourier Transform comes in.

The Fourier Transform is a tool that decomposes a signal into its frequency components. It shows what frequencies are present in the sound and how strong they are. Instead of viewing sound as a function of time, we view it as a function of frequency. This is the frequency domain.

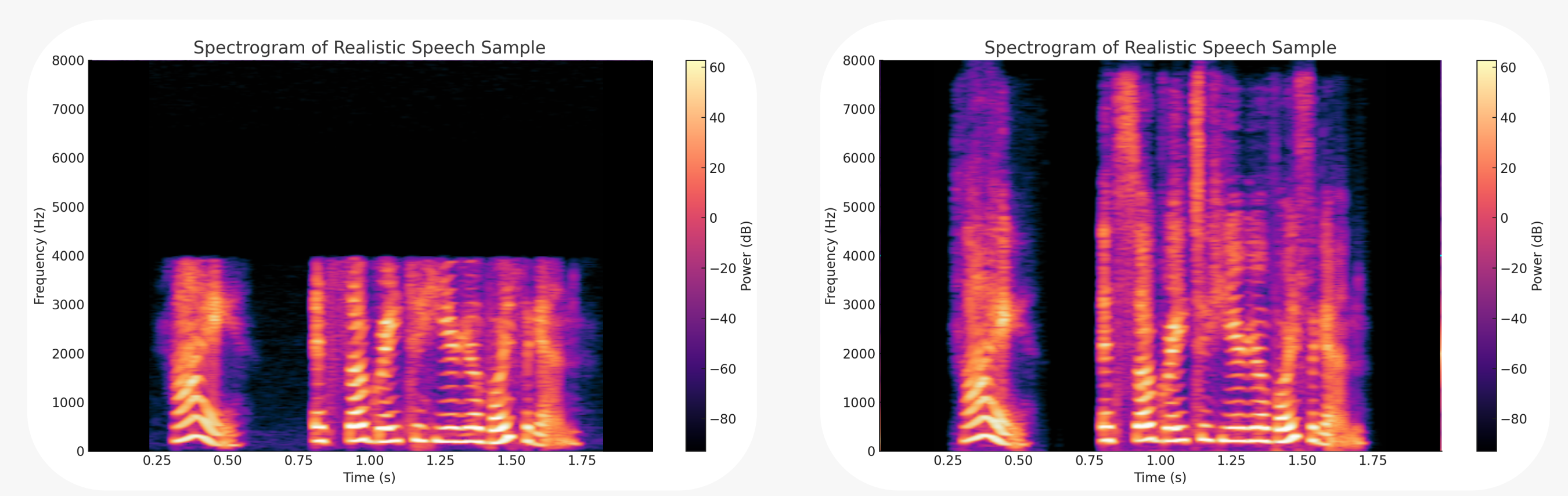
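As a small illustration of moving from the time domain to the frequency domain, the sketch below runs a naive discrete Fourier transform over a signal built from two tones. The tone frequencies, amplitudes, and the function name `dft_magnitudes` are assumptions made for the example; production systems would normally use a fast Fourier transform library instead of this O(N²) loop.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitude of each DFT bin from 0 Hz up to the Nyquist bin (naive O(N^2))."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        mags.append(abs(acc))
    return mags

sample_rate = 8000
n = 400  # 50 ms of audio, so bins are spaced 8000 / 400 = 20 Hz apart

# Time-domain signal: a 200 Hz tone mixed with a quieter 1000 Hz tone.
signal = [math.sin(2 * math.pi * 200 * i / sample_rate)
          + 0.5 * math.sin(2 * math.pi * 1000 * i / sample_rate)
          for i in range(n)]

mags = dft_magnitudes(signal)
strongest = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:2]
peak_freqs = sorted(k * sample_rate / n for k in strongest)
print(peak_freqs)  # the two frequencies that were tangled together in time
```

The waveform alone does not make the two tones obvious, but the two largest bins land exactly on the frequencies that were mixed in.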

But speech changes constantly. A static frequency breakdown isn’t enough. So we use a variation called the Short-Time Fourier Transform (STFT). It breaks the signal into small overlapping windows and computes the frequency content of each slice. When we visualize these slices side by side, we get a spectrogram. The cochlea in the human ear performs a comparable analysis continuously, using tiny hair cells that are each tuned to a narrow range of frequencies.

A spectrogram is a map of how sound evolves, a way to see what we’re hearing. It shows time on the x-axis, frequency on the y-axis, and amplitude as color.
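The windowing idea behind the STFT can be sketched in a few lines. This is an illustrative toy, not the analysis used in Sanas Speech Enhancement: the frame length of 64 samples, the hop of 32 samples, the Hann window, and the two-tone test signal are all assumptions chosen to keep the example small.

```python
import cmath
import math

def stft_magnitudes(samples, frame_len, hop):
    """Return one list of bin magnitudes per overlapping, Hann-windowed frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_len)
              for i in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = [samples[start + i] * window[i] for i in range(frame_len)]
        frames.append([
            abs(sum(x * cmath.exp(-2j * math.pi * k * i / frame_len)
                    for i, x in enumerate(chunk)))
            for k in range(frame_len // 2 + 1)
        ])
    return frames

sample_rate = 8000
frame_len, hop = 64, 32  # 8 ms frames with 50% overlap; bins are 125 Hz apart

# Test signal whose content changes over time: 500 Hz, then 2000 Hz.
half = 400
signal = ([math.sin(2 * math.pi * 500 * i / sample_rate) for i in range(half)]
          + [math.sin(2 * math.pi * 2000 * i / sample_rate) for i in range(half)])

spec = stft_magnitudes(signal, frame_len, hop)

# Strongest frequency in each time slice (each entry is one spectrogram column).
dominant = [max(range(len(f)), key=f.__getitem__) * sample_rate / frame_len
            for f in spec]
print(dominant[0], dominant[-1])
```

Each inner list corresponds to one column of a spectrogram. A single Fourier transform over the whole signal would report both tones at once, while the per-frame view shows when each one is present.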

At an 8kHz sampling rate, the maximum representable frequency is 4kHz due to the Nyquist limit, which is half the sampling rate. Frequencies above that limit, including high-frequency harmonics and fine-grained speech detail, cannot be accurately captured and are not recorded. That content isn’t quiet; it’s absent.
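One way to see why content above the Nyquist limit cannot be represented is to sample a tone above 4kHz at 8kHz and compare it with a tone below 4kHz. The sketch below uses 5kHz and 3kHz test tones, which are assumptions picked for illustration. In practice, telephony systems low-pass filter the signal before sampling, so this content is removed instead of being folded back into the band.

```python
import math

sample_rate = 8000
nyquist = sample_rate / 2  # highest frequency an 8kHz stream can represent

def sampled_sine(freq_hz, n_samples):
    """Sample a unit-amplitude sine wave at the 8kHz rate defined above."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

tone_5k = sampled_sine(5000, 64)  # above the Nyquist limit
tone_3k = sampled_sine(3000, 64)  # below the Nyquist limit

# Sample for sample, the 5kHz tone equals the 3kHz tone with its sign flipped,
# so the two are indistinguishable once stored at 8kHz.
max_diff = max(abs(a + b) for a, b in zip(tone_5k, tone_3k))
print(nyquist, max_diff < 1e-9)
```

Once the samples are stored, nothing in them says whether the original tone was at 5kHz or 3kHz, which is why an 8kHz recording carries no usable information above 4kHz.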

In Figure 1, the 8kHz spectrogram shows a sharp cutoff at 4kHz, where all higher-frequency content is missing. In contrast, the 16kHz spectrogram retains the full range of vocal detail, capturing the additional harmonics and texture that give the voice its clarity and richness. In a later section, you’ll be able to hear the difference between the two versions of the audio shown in Figure 1.

The Technical Challenge and Our Approach

The difficulty in upscaling from 8kHz to 16kHz isn’t in stretching the signal, it’s in reconstructing information that was never captured. Frequencies above 4kHz don’t exist in an 8kHz recording. Naive approaches to upsampling can interpolate or smooth the existing signal, but they don’t restore the high-frequency detail that gives speech its clarity and presence. In many cases, this results in perceptual flatness, or worse, audible artifacts that distort the speaker’s natural voice.

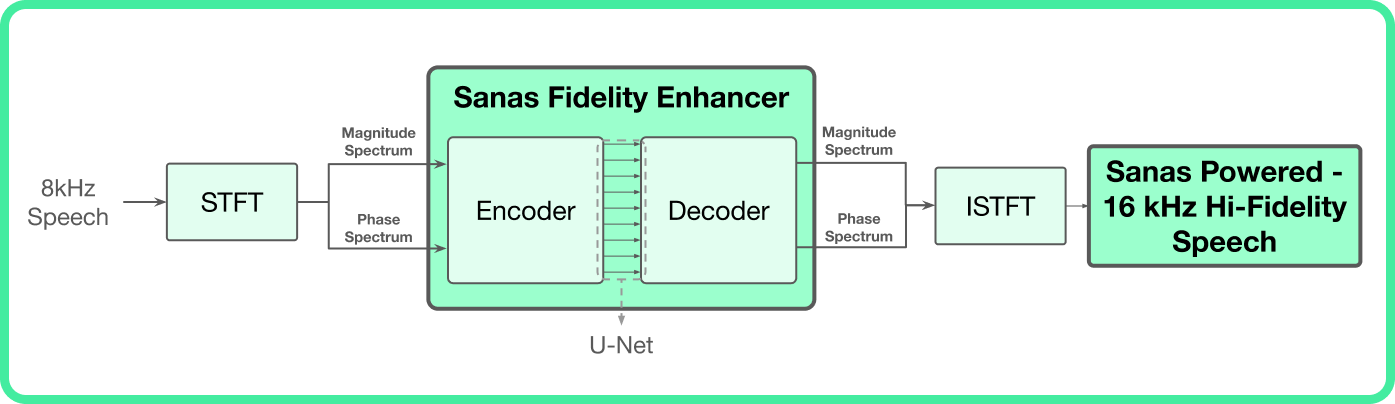
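The limits of naive upsampling can be checked numerically. The sketch below doubles the sample rate of a narrowband tone by linear interpolation and measures how much spectral energy ends up above 4kHz. Linear interpolation, the 1kHz test tone, and both helper names are assumptions for illustration; this is the kind of baseline the paragraph above describes, not the Sanas approach.

```python
import cmath
import math

def upsample_2x_linear(samples):
    """Double the sample rate by inserting the midpoint between neighbours."""
    out = []
    for i, x in enumerate(samples):
        nxt = samples[i + 1] if i + 1 < len(samples) else x  # hold last sample
        out.extend([x, 0.5 * (x + nxt)])
    return out

def energy_fraction_above(samples, sample_rate, cutoff_hz):
    """Share of total spectral energy in DFT bins above cutoff_hz."""
    n = len(samples)
    total = high = 0.0
    for k in range(n):
        power = abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(samples))) ** 2
        total += power
        freq = min(k, n - k) * sample_rate / n  # fold negative-frequency bins
        if freq > cutoff_hz:
            high += power
    return high / total

# A 1kHz tone captured at 8kHz, then naively stretched to 16kHz.
narrowband = [math.sin(2 * math.pi * 1000 * i / 8000) for i in range(128)]
upsampled = upsample_2x_linear(narrowband)

share = energy_fraction_above(upsampled, 16000, 4000)
print(len(upsampled), share)
```

The output has twice as many samples, but only a tiny fraction of its energy sits above 4kHz, and that fraction is a faint mirror image of the original tone left over from imperfect interpolation, not recovered speech detail.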

We designed Sanas Speech Enhancement to predict missing spectral detail while preserving the original signal. It runs in real time, with minimal latency, and adapts across microphones, codecs, and noise conditions. To our knowledge, this level of fidelity has not been achieved under these constraints before. Real-time reconstruction of high-fidelity speech from 8kHz input sets a new standard for quality, speed, and scalability in the industry.

Our system leverages proprietary neural architectures, drawing on principles from U-Nets and advanced real-time vocoding, to reconstruct the information that low-fidelity audio never captured. We identify the statistical patterns that link low-fidelity input to the wide-band speech we expect to hear.

Sanas Speech Enhancement generalizes without needing to rewrite the signal or resynthesize speech from scratch. The final result is a high-fidelity output that remains true to the speaker, operating fast enough for real-time interaction.

What It Means to Hear More

Low-fidelity audio often appears sufficient until it’s compared directly to something better. At 8kHz, speech is technically intelligible, but the loss of high-frequency information makes it harder to parse. Much of what makes speech clear and effortless to understand is missing.

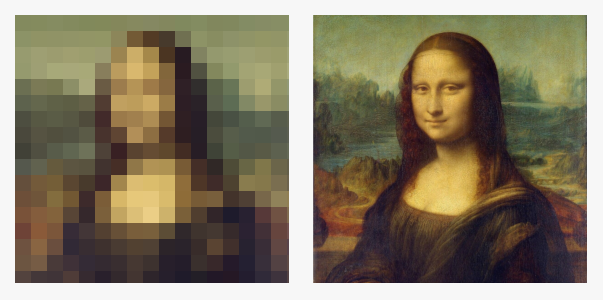

A visual analogy helps. Below is an image of the Mona Lisa, rendered at 17×17 pixels. At this resolution, you can tell it’s a portrait. But you can’t see her expression. You lose the shading, the subtle contours, everything that gives the image its depth. The structure is there, but the meaning is degraded.

This is what low-fidelity speech does. At 8kHz, you can still hear the sentence, but the clarity, texture, and sharpness are missing. The sharp edges of consonants are dulled, and the speaker’s voice starts to feel generic, stripped of the subtle cues that make it recognizably human.

With wideband audio at 16kHz, that detail is preserved. And with Sanas Speech Enhancement, we can restore that level of detail, even when the original signal is narrowband.

Want to hear it in action? Watch the video below to hear audio before and after Sanas Speech Enhancement using the same audio file depicted in the Figure 1 spectrogram.

The first audio sample is the original 8kHz narrowband input, which is missing high-frequency content. The second sample is Sanas Speech Enhancement output generated from the 8kHz input in real time.

The difference you hear shows that fidelity is more than clarity, it’s the richness, depth, and nuance that make speech sound uniquely human.

It gets better. Because Sanas Speech Enhancement restores the missing detail in real time without changing the way audio is stored or transmitted, you get the full experience of high-fidelity speech, while retaining all of the cost-saving benefits of low-fidelity 8kHz audio.

Establishing a New Baseline for Speech Fidelity

Reconstructing high-fidelity speech from low-fidelity input requires operating across time and frequency, predicting missing components without altering the signal that remains, and doing it all fast enough for live communication. It’s a task that’s long been considered too complex for real-time systems, until now.

Sanas Speech Enhancement is one part of a larger effort at Sanas: using AI to fundamentally improve how people communicate. Not by discarding the signal, but by restoring what should have been there in the first place, regardless of bandwidth, hardware, or infrastructure.

It opens the door to a new class of real-time speech enhancements, built on the same principles: preserving what’s original, restoring what’s missing, and delivering results that stand up to native high-fidelity audio, both analytically and perceptually.

Sanas Speech Enhancement builds on top of noise cancellation, which removes background distractions while leaving the foreground untouched. We take it further, not just subtracting what doesn’t belong, but enhancing what does. Clearer, richer, more human speech is now possible, even in the most constrained environments. This isn’t the end, it’s the start of a new baseline.