Solving Live Language Translation: A Breakthrough Approach Using Real-Time Clause-Aware LLMs

For more than half a century, building a universal instant translator has been one of technology’s ultimate engineering goals. Imagine a device that lets you instantly understand anyone, in your language but in their own voice. It could connect people across geography and culture in ways we’ve only imagined. Star Trek dreamed it; The Hitchhiker’s Guide to the Galaxy joked about it. So why has it taken so long?

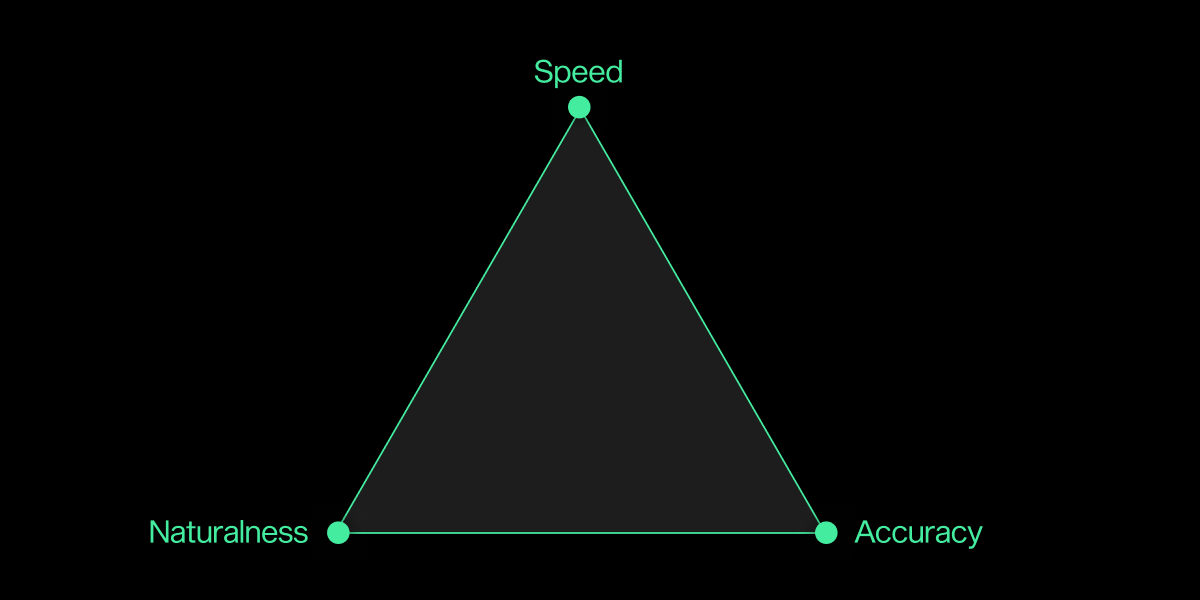

The problem is deceptively simple to state and hard to solve. Every instant voice translator today (or, more formally, simultaneous interpreter) falls short on at least one of three key requirements: speed, accuracy, and naturalness.

At Sanas, we believe a great experience requires all three, a combination we internally call the “translation trinity.” Yet even the most advanced systems claiming “instant translation” typically achieve only one or two of these criteria, which is why none has truly broken through.

We’re Jason and Scott, and together we lead the Language Translation team at Sanas. We both studied AI at Stanford, where we became fascinated by the intersection of multilingual speech and natural language systems. That passion led us to found Mida, a translation app that delivers contextually and culturally aware translations through LLMs. Our work has always focused on one question: how can AI understand language the way people do? After Sanas acquired Mida, we joined the Sanas team to bring that vision to life at scale, building a system capable of instant, clause-aware speech translation.

Over the past six months, our small but talent-dense team has been building a brand-new system from the ground up to take on the translation trinity and finally break the language barrier. We’ve built what we believe is the most advanced live language translator available in production today.

In this article, we'll share the story behind how we leveraged LLMs to solve language barriers.

Challenge 1: Solving Accuracy in Language Translation

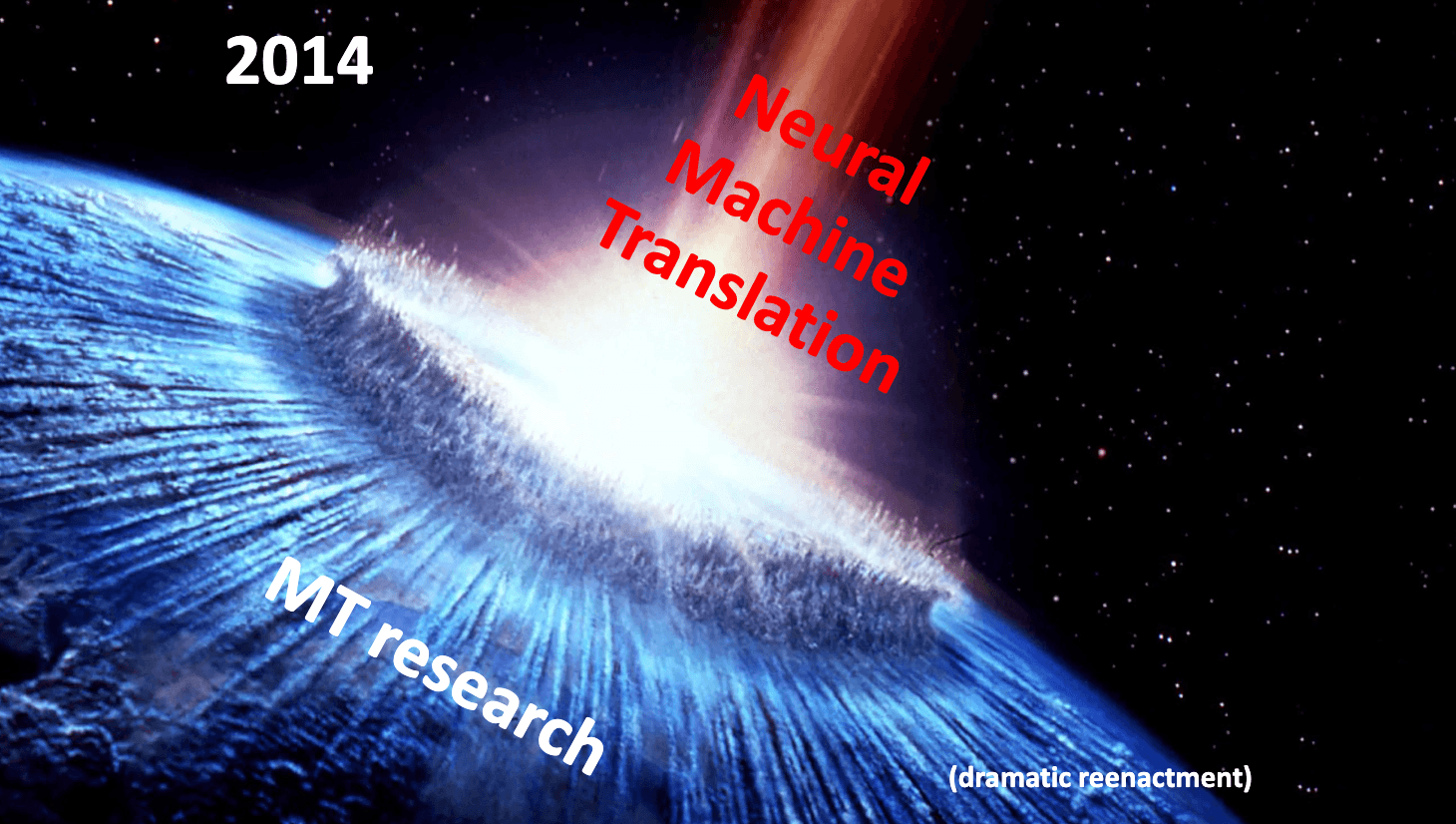

Non-engineers may be surprised to hear that within the machine-learning community, accuracy in language translation is considered a mostly “solved problem” — rare languages aside. This is because the invention of neural machine translation more than a decade ago solved many of the biggest issues that once limited quality.

Famous slide from Stanford’s CS224N AI class illustrating the effect of neural machine translation on the translation research community.

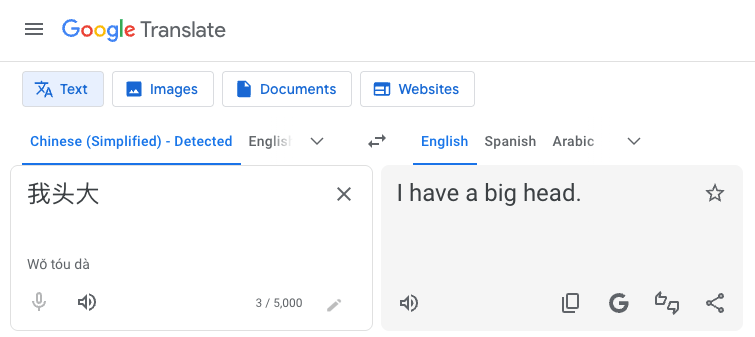

And yet, if you speak another language, you’ll know that “accurate” translation can still miss the mark. Word-for-word precision doesn’t guarantee that a phrase sounds natural or culturally appropriate. Google Translate still renders a Chinese expression that means “I have a headache” as “I have a big head.”

We want translations that are contextually aware, culturally fluent, and faithful to intent. But how can a computer grasp the nuance behind a sentence?

That’s where LLMs come in. Large language models are stellar at understanding surrounding context: topic, tone, formality, idioms. Specifically, with some prompting, you can wrangle an LLM to:

- Understand tone (casual vs. professional) based on conversational cues.

- Choose culturally natural paraphrases rather than brittle word-for-word mappings.

- Follow our guardrails (terminology lists, domain hints, structure-preserving prompts) so translations stay faithful to the source.
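As a rough illustration of the guardrails listed above, the sketch below assembles a translation prompt from a tone setting, a domain hint, and a terminology list. It is a minimal sketch under our own assumptions, not our production prompt: `TranslationRequest`, `build_translation_prompt`, and the prompt wording are hypothetical, and the call to an actual LLM is omitted because it depends on the provider.

```python
from dataclasses import dataclass, field


@dataclass
class TranslationRequest:
    """Inputs for one translation call (hypothetical schema, for illustration)."""
    source_text: str
    source_lang: str
    target_lang: str
    tone: str = "neutral"      # e.g. "casual" or "professional", inferred upstream
    domain_hint: str = ""      # e.g. "scheduling a business call"
    glossary: dict[str, str] = field(default_factory=dict)  # required term mappings


def build_translation_prompt(req: TranslationRequest) -> str:
    """Assemble a guardrailed translation prompt (illustrative wording only)."""
    lines = [
        f"Translate the following {req.source_lang} text into {req.target_lang}.",
        f"Match a {req.tone} register and prefer natural, idiomatic phrasing "
        "over word-for-word renderings.",
        "Preserve the speaker's meaning and intent; do not add or omit content.",
    ]
    if req.domain_hint:
        lines.append(f"Context: {req.domain_hint}.")
    if req.glossary:
        lines.append("Always use these term translations:")
        for src, tgt in sorted(req.glossary.items()):
            lines.append(f"- {src} -> {tgt}")
    lines.append("Output only the translation.")
    # Place the source text last, clearly labeled, after all instructions.
    lines.append(f"Text: {req.source_text}")
    return "\n".join(lines)


if __name__ == "__main__":
    request = TranslationRequest(
        source_text="Can we move the meeting to Friday?",
        source_lang="English",
        target_lang="Spanish",
        tone="professional",
        domain_hint="scheduling a business call",
        glossary={"meeting": "reunión"},
    )
    print(build_translation_prompt(request))
```

Keeping the glossary as structured data, rather than hand-editing prompt text, makes it easy to swap terminology lists per domain while the core instructions stay fixed.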

With this approach, our system produces translations that capture both intent and tone, not just literal meaning. But that raises the next challenge: even the most accurate translation won’t feel natural if it arrives too late. Can we do it fast enough for live conversation?

Challenge 2: Solving Speed in Language Translation

If a translator is slow, it creates an awkward back-and-forth delay that breaks the natural flow of conversation. Yet languages differ fundamentally in word order, so some delay is unavoidable: a translator often has to hear a later word, such as a verb that comes at the end of the sentence, before it can accurately translate an earlier one. How do we find the right level of delay?

We found our answer in a surprising place: Monterey, a picturesque coastal city about two hours from our Palo Alto office. Thanks to its history of diplomacy and international studies, Monterey boasts an unusually large concentration of human language translation talent, so much so that it earned the nickname “the language capital of the world.”

Image: Monterey, California. Home to one of the largest interpreter communities in the U.S.

We were inspired by the “simultaneous interpreters” common in Monterey. Rather than waiting for each party to finish their sentence, simultaneous interpreters begin translating while the speaker is still talking to minimize the delay. You may have seen this technique during United Nations speeches or during live press conferences. It’s an extremely difficult skill to listen, process, and speak at the same time, often about complex policy topics. Hats off to the interpreters!

While reading an interpreter training manual, we noticed that interpreters wait for the first point in the sentence where a complete thought (or, more formally, a grammatical clause) has been expressed. These natural boundaries let them break a sentence into smaller units and begin speaking as soon as a thought is stable. We used the same cue to train our system to detect these boundaries and predict how much delay each one requires.
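The control flow behind clause-aware timing can be sketched as a loop that buffers incoming words and releases a chunk for translation only once a clause looks complete. This is a toy illustration, not our model: the boundary test here is a hypothetical punctuation-and-conjunction heuristic (`CLAUSE_END_PUNCT`, `CLAUSE_START_WORDS`, and `segment_stream` are illustrative names), whereas the system described above is trained to detect boundaries, and real speech-recognition output may not carry reliable punctuation at all.

```python
from typing import Iterable, Iterator

# Toy stand-ins for a learned boundary detector (hypothetical heuristics).
CLAUSE_END_PUNCT = (",", ".", "?", "!", ";")
CLAUSE_START_WORDS = {"and", "but", "because", "so", "while", "although"}


def segment_stream(words: Iterable[str], min_words: int = 3) -> Iterator[list[str]]:
    """Yield clause-sized chunks from a word stream as soon as each looks complete."""
    buffer: list[str] = []
    for word in words:
        # A conjunction that opens a new clause closes the one in progress.
        if word.lower() in CLAUSE_START_WORDS and len(buffer) >= min_words:
            yield buffer
            buffer = []
        buffer.append(word)
        # Clause-final punctuation closes the clause that ends with this word.
        if word.endswith(CLAUSE_END_PUNCT) and len(buffer) >= min_words:
            yield buffer
            buffer = []
    if buffer:
        yield buffer  # flush the remainder once the speaker stops


if __name__ == "__main__":
    stream = "I missed the train because it left early, so I took a taxi.".split()
    for chunk in segment_stream(stream):
        print(" ".join(chunk))
```

Each yielded chunk would be handed to the translator while the speaker keeps talking, which is what lets output begin before the sentence ends. The `min_words` guard is one simple way to avoid emitting fragments too short to translate reliably.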

By modeling our system on the best practices of the world’s most skilled interpreters, we’d figured out how to build a translator that could listen and respond fluidly. With speed and accuracy in place, the final challenge was the most human one of all: making sure the translated voice still sounded like the person speaking.

Challenge 3: Solving Naturalness in Language Translation

Even the most accurate translation can feel incomplete if it doesn’t sound human. Translated audio shouldn’t sound like a robot or a random voice; it should sound like us. It needs to carry laughter, warmth, and sadness: the whole gamut of human emotion that our wonderfully expressive voices can communicate.

When we got to this final side of the translation trinity, we realized we had a unique advantage. Sanas had already pioneered real-time speech AI that preserves the individuality of a speaker’s voice. That foundation gave us a highly performant architecture to capture the timbre, intonation, and pacing that make every voice distinct.

To keep transitions smooth, we also dynamically crossfade the audio, like a radio DJ, switching from the user’s original voice to the translated audio. The result is a voice that feels consistent, expressive, and unmistakably yours.
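One common way to implement a DJ-style transition is an equal-power crossfade: the outgoing signal is scaled by a cosine curve and the incoming one by a sine curve, so the squared gains always sum to 1 and perceived loudness stays roughly constant. The sketch below assumes both signals are mono float samples at the same sample rate; `crossfade` and `fade_len` are hypothetical names, and the fixed curve is a simplifying assumption rather than the dynamic crossfade our system uses.

```python
import math


def crossfade(original: list[float], translated: list[float], fade_len: int) -> list[float]:
    """Equal-power crossfade from the tail of `original` into `translated`.

    The last `fade_len` samples of `original` overlap the first `fade_len`
    samples of `translated`, so the output is shorter than the two inputs
    concatenated by exactly `fade_len` samples.
    """
    if fade_len < 0 or fade_len > min(len(original), len(translated)):
        raise ValueError("fade_len must fit inside both signals")
    head = original[: len(original) - fade_len]
    overlap = []
    for i in range(fade_len):
        t = (i + 0.5) / fade_len            # position within the overlap, 0 < t < 1
        g_out = math.cos(t * math.pi / 2)   # gain for the fading original voice
        g_in = math.sin(t * math.pi / 2)    # gain for the rising translated voice
        overlap.append(g_out * original[len(head) + i] + g_in * translated[i])
    tail = translated[fade_len:]
    return head + overlap + tail
```

A linear crossfade is simpler, but it dips in loudness at the midpoint for uncorrelated signals such as two different voices, which is why equal-power curves are a common default.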

Challenge 4: Validating the Technology by Measuring the Translation Trinity

After building a system that could listen, think, and speak in real time, we needed to know whether it actually worked in the real world. We built a comprehensive set of benchmarks to verify our system across each side of the “translation trinity”: accuracy, speed, and naturalness.

1. Speed

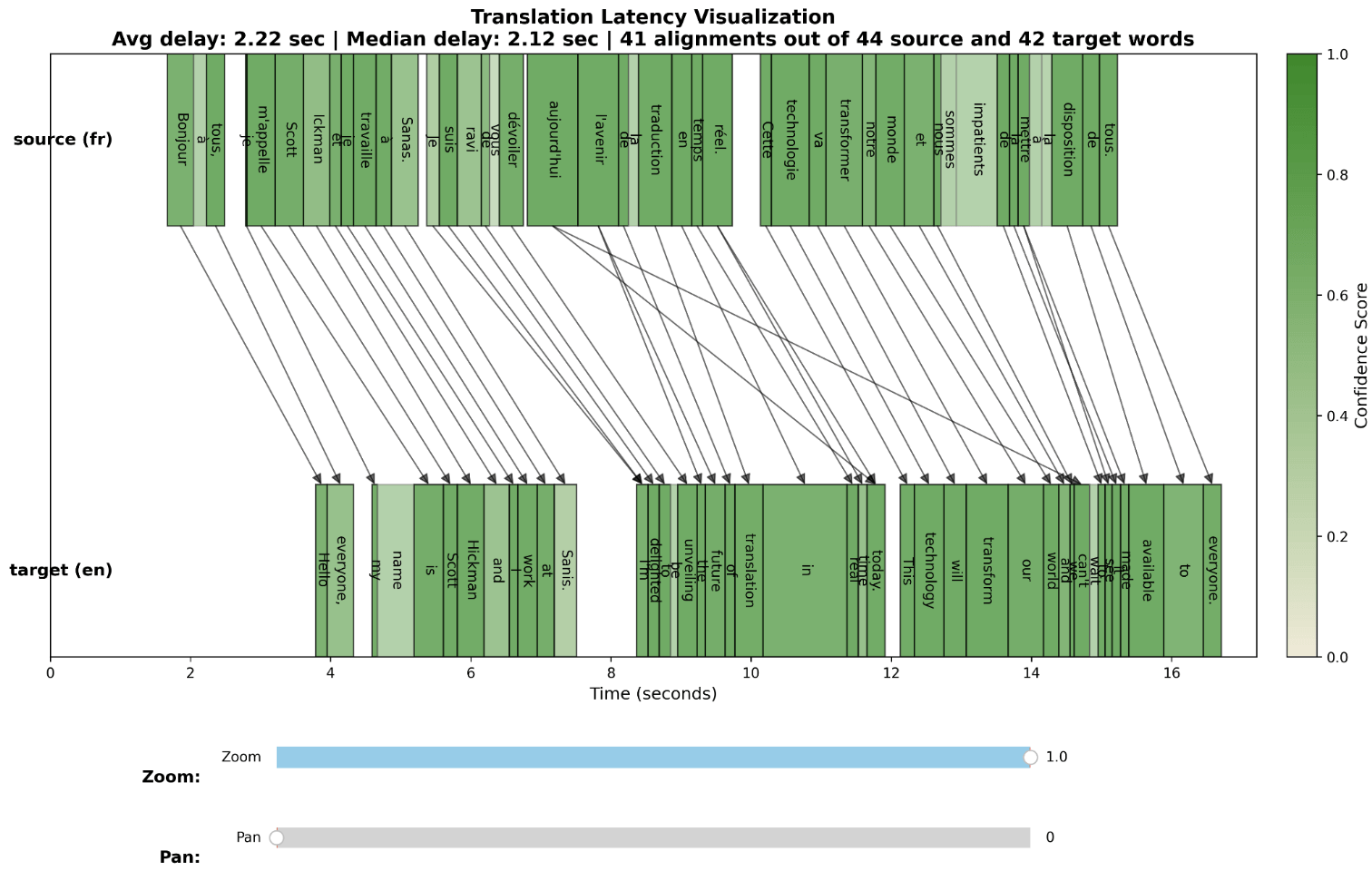

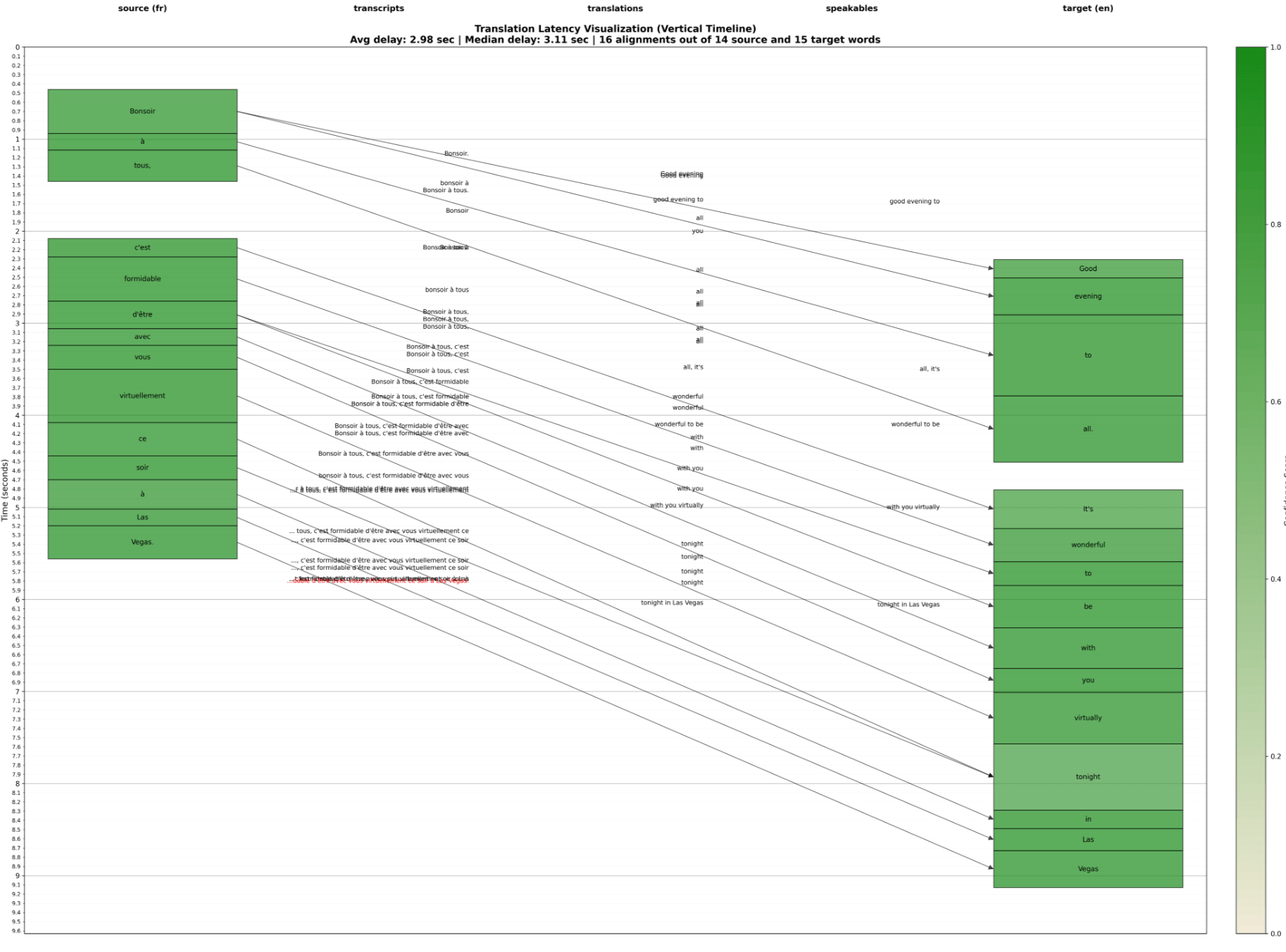

To measure real-time performance, we built a test harness that ingests audio, translates it live, and measures two key timing metrics:

- TTFW (Time to First Word): how quickly the system begins speaking

- TALW (Time After Last Word): how long it takes to finish once the speaker stops
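Both timing metrics reduce to simple differences between timestamps. The sketch below assumes the harness logs, for each utterance, when the speaker's first and last words occur and when the translated audio starts and ends, all on one clock in seconds. The `UtteranceTiming` record, the helper names, and the exact reference points (for example, measuring TTFW from the speaker's first word) are our assumptions about how such a harness could define them.

```python
from dataclasses import dataclass


@dataclass
class UtteranceTiming:
    """Timestamps in seconds on a shared clock (hypothetical log format)."""
    input_first_word_start: float   # speaker begins talking
    input_last_word_end: float      # speaker stops talking
    output_first_word_start: float  # translated audio begins
    output_last_word_end: float     # translated audio ends


def ttfw(t: UtteranceTiming) -> float:
    """Time to First Word: how long before the translator starts speaking."""
    return t.output_first_word_start - t.input_first_word_start


def talw(t: UtteranceTiming) -> float:
    """Time After Last Word: how long output continues after the speaker stops."""
    return t.output_last_word_end - t.input_last_word_end


def summarize(timings: list[UtteranceTiming]) -> dict[str, float]:
    """Mean and minimum of each metric across a benchmark run."""
    ttfws = [ttfw(t) for t in timings]
    talws = [talw(t) for t in timings]
    return {
        "ttfw_mean": sum(ttfws) / len(ttfws),
        "ttfw_min": min(ttfws),
        "talw_mean": sum(talws) / len(talws),
        "talw_min": min(talws),
    }
```

Reporting the minimum alongside the mean, as in the figures below, shows both the typical delay and how fast the system can respond when sentence structure allows.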

Across multiple benchmarks, our system has an end-to-end delay averaging 2 seconds, with a minimum of around 1.5 seconds, depending on sentence characteristics and language pair.

We’ve seen other systems advertise near-instant translation, but in practice they often cut corners: translating incomplete input or, worse, outputting something no one actually said. As we learned when solving for speed, languages differ fundamentally in word order, so a small delay is unavoidable. That’s why our metrics reflect real-world conditions, not idealized demos. Beware of false claims and staged demos; only trust what you can try yourself!

To show how we measure progress, we've included charts featuring results from early-stage versions of our system. They don’t reflect our latest performance, but they capture the journey and the framework we still use to evaluate every model.

2. Accuracy

We take a two-pronged, human-centered approach to accuracy evaluation. While automated metrics are all the rage these days, we find they often miss the contextual nuance that only humans can perceive. So we combine the best of both: automated metrics for testing over millions of sentences, and human evaluation for understanding meaning in context.

Our automated tests run diverse metrics on a variety of datasets, optimizing for sentence and vocabulary coverage.

- We use common metrics such as BLEU (Bilingual Evaluation Understudy), METEOR (Metric for Evaluation of Translation with Explicit ORdering), TER (Translation Edit Rate), and WER (Word Error Rate) to measure how closely our translations match reference sentences at the word and phrase level. We also use xCOMET, a neural evaluation model that compares translations in context to assess whether meaning and tone are preserved, not just the wording.

- We source data from a mix of internal tests and standardized benchmark sets such as FLORES-101, a multilingual dataset designed to measure translation quality across 100+ languages, which we’ve augmented with TTS (text-to-speech) to simulate real spoken input.
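To make the word-level metrics concrete, here is a minimal word error rate computed with the standard Levenshtein dynamic program over words. TER counts the same insertions, deletions, and substitutions but also allows shifts of whole word blocks, which this sketch leaves out. For reportable BLEU and TER numbers, established tools such as sacreBLEU are the safer choice. `word_error_rate` is an illustrative name, and no text normalization (casing, punctuation) is applied.

```python
def word_error_rate(hypothesis: str, reference: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    hyp = hypothesis.split()
    ref = reference.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the hyp words seen so far and the first j ref words
    prev = list(range(len(ref) + 1))
    for i in range(1, len(hyp) + 1):
        curr = [i] + [0] * len(ref)
        for j in range(1, len(ref) + 1):
            cost = 0 if hyp[i - 1] == ref[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # extra hypothesis word (insertion)
                curr[j - 1] + 1,     # missing reference word (deletion)
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[len(ref)] / len(ref)
```

For example, `word_error_rate("the cat sat on mat", "the cat sat on the mat")` finds one deletion against six reference words, giving about 0.167. Lower is better, and unlike BLEU the score can exceed 1 when the hypothesis is much longer than the reference.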

The result: our BLEU score on the FLORES-101 dataset is 47.6. Notably, our system reaches this score while hearing and translating sentences word by word, and in our comparisons it outperforms systems that see the entire sentence upfront.

For human testing, we have several sources, including:

- User ratings in the Sanas mobile app, which is already used in 70+ countries. This feedback is a gold mine, surfacing insights on languages that would have been hard to gather ourselves.

- Internal testing across more than 20 languages. Our small translation team alone speaks 8 languages combined (we’re all immigrants or children of immigrants!).

- Human evaluation of translations through post-editing, corrections, and feedback, using measures such as HTER (Human-targeted Translation Edit Rate), which captures how much human effort is required to improve a machine translation.
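HTER applies the TER formula with a targeted reference: a human post-edits the system output, and the score counts the edits that post-editing required, normalized by the length of the post-edited text. Written out, with an illustrative example of our own rather than a measured result:

```latex
\mathrm{HTER} = \frac{\#\,\text{edits (insertions, deletions, substitutions, shifts) from MT output to its post-edit}}{\#\,\text{words in the post-edited translation}}
\qquad\text{e.g.}\qquad \frac{3\ \text{edits}}{20\ \text{words}} = 0.15
```

Lower is better: an HTER of 0 means the translation needed no changes at all.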

This hybrid approach gives us a clear picture of how well our system understands not just the words people say, but what they mean.

3. Naturalness

Rather than relying solely on benchmark scores, we evaluate naturalness based on real-world feedback. Within our mobile app, we ask users to rate how well the translated voice matches their own, then track those scores over time to measure improvement.

See it in action in the video below!

Parting Thoughts

At Sanas, we have a unique focus on building real products that can serve millions, not just research demos. We invite you to try the early research preview of our Language Translation system in the mobile app and to share feedback there, which we’ll use to keep building and improving.

We believe translation isn’t just an engineering problem; it’s a human one. Working with language means thinking about diverse cultures, norms, and preferences. It’s deeply cross-disciplinary, unravelling both the science of language and the art of communication. It’s a linguistics, engineering, UX, HCI, design, and systems problem rolled into one. And for all its multi-dimensionality, we find language translation a meaningful and strikingly beautiful problem to solve.

Ultimately, the perfect system should feel invisible. It should feel not like a tool but like an extension of the self, so natural you forget it’s there. At that point, the translation system fades into what Mark Weiser, writing in 1991, described as invisibility and ubiquity.

We know we’ve only just started, and there is a long road ahead. But we’re energized by how far we’ve come and by what’s possible next.

If this vision resonates with you, we’re hiring! Come join our small but driven team of former Stanford, Google, Citadel, and Apple engineers, and help us build the world’s most natural real-time translator. Check out our Careers page or reach out directly to jason.lin (at) sanas.ai.

Today, a perfect storm of new technologies has made solving the language barrier finally achievable. Come build with us!