Inside Sanas Speech Enhancement 1.0: The Science Behind Real-Time Voice Clarity

At Sanas, our mission is to make every voice universally clear and intelligible, no matter the noise. With the launch of Speech Enhancement 1.0, we’re taking a leap forward.

Speech Enhancement 1.0 understands the soundscape and adapts in real time, preserving clarity and intelligibility in every voice. It combines a new type of neural network architecture with advanced, acoustically accurate training-data simulations to deliver unprecedented clarity and robustness. It’s designed for the messiest, most unpredictable conditions, from open offices and call centers to cars, cafés, and city streets.

In this article, we explore the two primary innovations that make Speech Enhancement 1.0 a breakthrough in real-time communication, with performance verified on real-life recordings using objective metrics and benchmarked against a leading commercial competitor:

- Dual-Decoder Architecture: A new model design inspired by how humans produce and perceive speech.

- Acoustic Simulation for Voice Isolation: A new training data simulation paradigm based on the physics of sound propagation.

Together, these advances redefine what “clean audio” means for enterprises, developers, and everyday users.

The Challenge

Picture yourself on a call in a crowded café, on a noisy street, or in the family car. In all these moments, your voice competes with a wall of sound (chatter, traffic, clattering dishes) until it becomes unintelligible to the person on the other end. These aren’t rare edge cases; they’re the reality of modern communication.

Over the years, engineers have tried to fix this through acoustic microphone arrays, classical signal processing, and, more recently, AI-based algorithms. Yet every approach runs into the same three fundamental problems:

- Noise and Voice Leakage: Background chatter and unpredictable sounds often slip through, especially in environments with multiple people speaking at once.

- Speech Deletion: Conventional algorithms sometimes mistake parts of speech for noise, removing important phonetic details that carry emotion and meaning.

- Deteriorated Speech Quality: Even when voices are isolated successfully, the resulting audio can sound metallic, muffled, or distorted.

AI models have made significant progress, yet even the most advanced systems continue to struggle in dynamic, multi-speaker environments where background voices leak through and clarity drops as soon as acoustic conditions change.

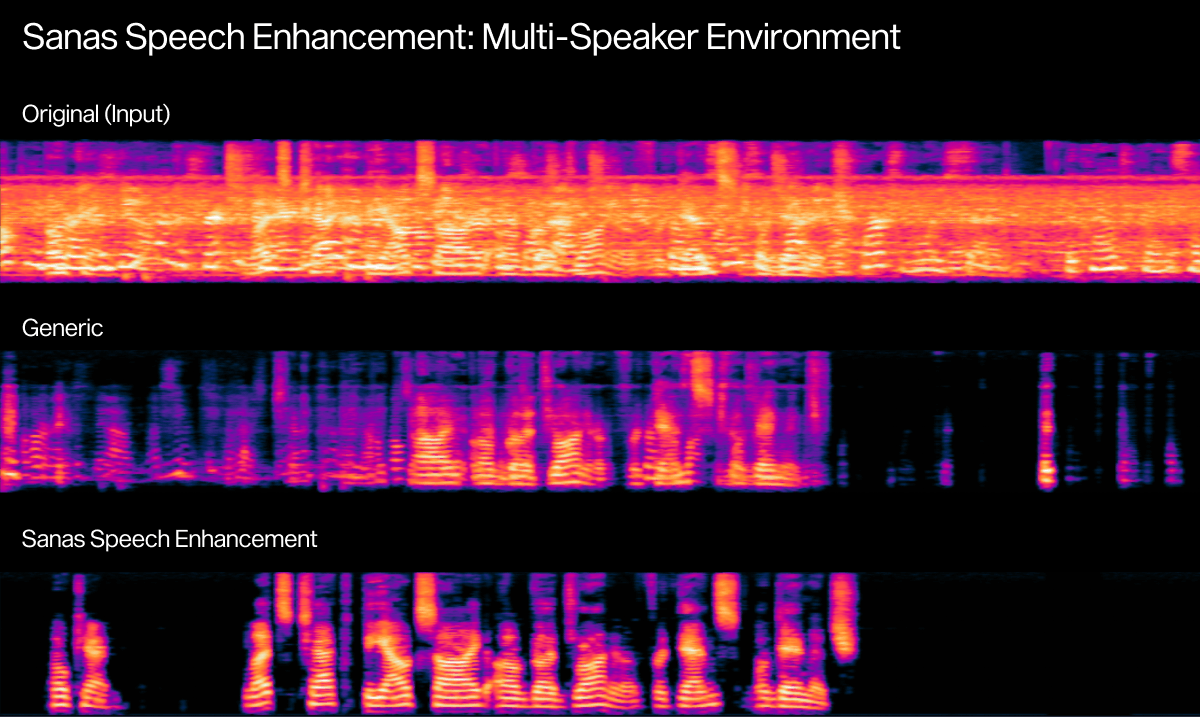

| Original (Input) | Generic | Sanas SE1.0 |
|---|---|---|
| Multiple speakers in the background | Deteriorated audio quality, noise/voice leakage, missing foreground speech | Clean isolation and preservation of the foreground speech |

The difficulty lies in the complexity of real-world sound. An algorithm that performs beautifully in a quiet room can break down when someone moves, when reverberation changes, or when new voices enter the mix. Solving this requires a model that can tell not only speech from noise but also who is speaking and how far they are from the microphone, all in real time.

That’s the problem Speech Enhancement 1.0 set out to solve.

The Sanas Solution: How Speech Enhancement 1.0 Works

Solving this problem required rethinking how speech is distinguished from noise, from data generation through model architecture. The Sanas science team began by tackling three underlying challenges that have limited every prior approach.

First, real-world audio is chaotic. The model must handle extreme variation in acoustics and signal conditions — everything from crowd noise and room echo to interference, distortions, and moving microphones — while preserving the clarity of the human voice. To overcome this, we built a comprehensive acoustic simulator capable of generating realistic training data that captures how voices and environments interact, allowing Speech Enhancement 1.0 to perform reliably across virtually any condition.

Second, real-time performance demands efficiency. Speech Enhancement 1.0 had to be compact enough to run in real time on consumer devices, yet expressive enough to distinguish speech from non-speech. In practice, these signals often overlap, making it difficult to extract speech from background babble without erasing meaningful details. We solved this by developing a novel neural network architecture that balances compactness with high representational power, preserving both performance and natural sound.

Third, the objectives themselves often conflict during training. A model must isolate the speaker’s voice while suppressing background participants. It must ignore a nearby voice, unless that person is part of the same call on speakerphone. These “singularities” represent real communication scenarios that conventional systems can’t consistently handle. We addressed this by designing a framework in which singularities are no longer edge cases but well-defined use cases, teaching the system to adapt intelligently to conversational context.

At Sanas, this multidisciplinary approach draws from expertise that goes far beyond AI alone. Some of our scientists once designed concert halls; others specialize in linguistics and signal processing. Together, that blend of acoustics, language, and AI insight enabled us to redefine what real-time speech enhancement can achieve.

Innovation 1: A Dual-Decoder Architecture Inspired by Human Speech

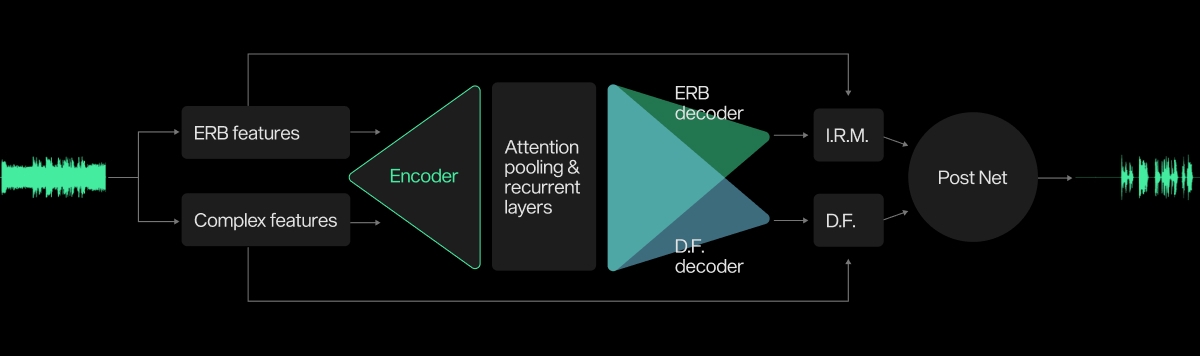

Every human voice contains two distinct components: harmonics (the periodic, structured tones that form vowels) and noise-like sounds (the aperiodic components that form many consonants, such as ‘s’ and ‘f’). Most systems process these components the same way, but speech isn’t uniform across the spectrum. Recognizing this, we designed Speech Enhancement 1.0 to treat these regions differently, much like the human ear does.
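To make the harmonic versus noise-like distinction concrete, the sketch below compares spectral flatness (the geometric mean of the power spectrum divided by its arithmetic mean) for a synthetic vowel-like tone and for white noise standing in for a fricative. Spectral flatness is our choice of illustration, not a feature the article says Speech Enhancement 1.0 uses, and the 150 Hz fundamental is an arbitrary assumption.

```python
import numpy as np

def spectral_flatness(x, eps=1e-12):
    """Geometric mean / arithmetic mean of the power spectrum (near 0 = tonal, near 1 = flat)."""
    power = np.abs(np.fft.rfft(x)) ** 2 + eps
    return float(np.exp(np.mean(np.log(power))) / np.mean(power))

fs = 16000
t = np.arange(fs) / fs  # 1 second of audio

# Vowel-like signal: a 150 Hz fundamental plus harmonics up to 4 kHz with 1/k amplitudes.
f0 = 150.0
harmonic = sum((1.0 / k) * np.sin(2 * np.pi * k * f0 * t) for k in range(1, int(4000 // f0) + 1))

# Fricative-like signal: broadband white noise.
noise = np.random.default_rng(0).standard_normal(fs)

print(f"harmonic flatness: {spectral_flatness(harmonic):.4f}")
print(f"noise flatness:    {spectral_flatness(noise):.4f}")
```

The harmonic signal concentrates its energy in a few dozen bins and scores near zero, while the noise spreads energy across the whole spectrum and scores much higher. That structural difference is what motivates giving each kind of content its own processing path.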

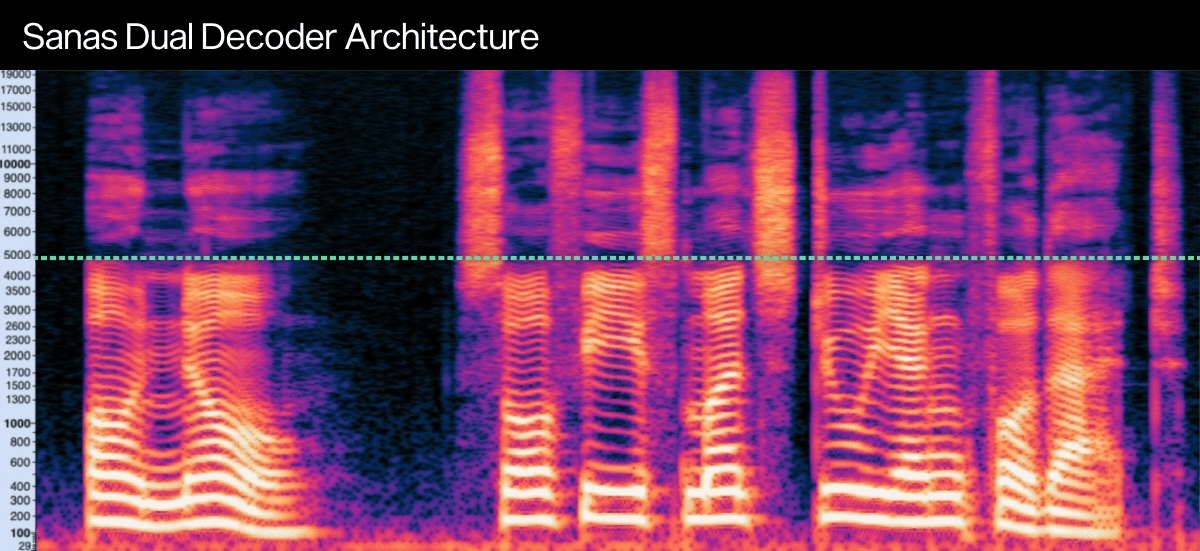

This insight led to a dual-decoder architecture: one encoder feeding two specialized decoders, each optimized for a different part of the signal. In the clean speech spectrogram below, harmonics (below 5 kHz) appear as distinct horizontal lines, while the energy above 5 kHz (formant energy, frication, and sibilance) appears as diffuse bands.

Above 5 kHz, Speech Enhancement 1.0 estimates and applies an ideal ratio mask on ERB (Equivalent Rectangular Bandwidth) features, which more closely mimic how the human cochlea filters sound. Unlike the conventional Mel scale, which compresses high frequencies and loses detail, ERB captures the subtle noise-like elements (the fricatives and sibilance) that make speech intelligible and natural.
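The sketch below illustrates ERB-band ratio masking under our own assumptions: 48 kHz audio, a 960-point FFT, 12 bands, and the common square-root IRM with β = 0.5. It places band edges between 5 kHz and Nyquist uniformly on the Glasberg and Moore ERB-rate scale. It then computes the ideal ratio mask per band from known clean-speech and noise spectra, which is how the mask is defined as a training target (a deployed model has to estimate it from the noisy input alone). Finally, it spreads each band's gain back onto its STFT bins. It is not Sanas's implementation.

```python
import numpy as np

def hz_to_erb_rate(f_hz):
    """Glasberg & Moore (1990) ERB-rate scale: number of ERBs below f_hz."""
    return 21.4 * np.log10(1.0 + 0.00437 * np.asarray(f_hz, dtype=float))

def erb_rate_to_hz(erb_rate):
    """Inverse of hz_to_erb_rate."""
    return (10.0 ** (np.asarray(erb_rate, dtype=float) / 21.4) - 1.0) / 0.00437

def erb_band_index(bin_freqs_hz, f_lo_hz, f_hi_hz, n_bands):
    """Map each STFT bin in [f_lo, f_hi] to an ERB band index; -1 marks bins outside."""
    edges = erb_rate_to_hz(np.linspace(hz_to_erb_rate(f_lo_hz), hz_to_erb_rate(f_hi_hz), n_bands + 1))
    idx = np.clip(np.searchsorted(edges, bin_freqs_hz, side="right") - 1, 0, n_bands - 1)
    in_range = (bin_freqs_hz >= f_lo_hz) & (bin_freqs_hz <= f_hi_hz)
    return np.where(in_range, idx, -1)

def band_irm(clean_mag, noise_mag, band_idx, n_bands, beta=0.5, eps=1e-12):
    """Ideal ratio mask per (frame, band) from known clean and noise magnitudes (frames, bins)."""
    frames = clean_mag.shape[0]
    s_energy = np.zeros((frames, n_bands))
    n_energy = np.zeros((frames, n_bands))
    for b in range(n_bands):
        cols = band_idx == b
        s_energy[:, b] = np.sum(clean_mag[:, cols] ** 2, axis=1)
        n_energy[:, b] = np.sum(noise_mag[:, cols] ** 2, axis=1)
    return (s_energy / (s_energy + n_energy + eps)) ** beta

def apply_band_gains(noisy_spec, gains, band_idx):
    """Spread per-band gains onto their bins; bins outside the ERB region are left untouched."""
    out = noisy_spec.copy()
    in_region = band_idx >= 0
    out[:, in_region] *= gains[:, band_idx[in_region]]
    return out

fs, n_fft, n_bands = 48000, 960, 12          # assumed: 48 kHz audio, 20 ms frames
freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
band_idx = erb_band_index(freqs, 5000.0, fs / 2, n_bands)

# Toy complex spectra standing in for simulated clean speech and noise (10 frames).
rng = np.random.default_rng(0)
shape = (10, freqs.size)
clean_spec = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
noise_spec = 0.5 * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
noisy_spec = clean_spec + noise_spec

irm = band_irm(np.abs(clean_spec), np.abs(noise_spec), band_idx, n_bands)
enhanced = apply_band_gains(noisy_spec, irm, band_idx)
```

Under these assumptions, pooling bins into ERB bands means the high-frequency path predicts 12 gains per frame instead of 381 bin-level values.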

Below 5 kHz, Speech Enhancement 1.0 applies deep filtering on complex time-frequency features, a technique that predicts finite impulse response (FIR)-like coefficients and filters across time steps in the short-time Fourier transform (STFT) domain. This lets the model capture correlations across neighboring frames and follow the continuity of harmonics over time. For readers interested in our evaluation setup and further methodology notes, see the Appendix.
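A minimal NumPy sketch of the deep-filtering operation, assuming a causal filter with no look-ahead: for every bin f and frame t, the output is a complex-weighted sum of the current and previous `order - 1` frames of that same bin. In Speech Enhancement 1.0 the coefficients come from a decoder; here they are random placeholders, and the 5-tap order and 100-bin size (the bins below 5 kHz at an assumed 48 kHz / 960-point FFT) are our assumptions.

```python
import numpy as np

def deep_filter(spec, coefs):
    """Apply per-bin complex FIR filters along the STFT frame axis.

    spec:  complex STFT of shape (frames, bins)
    coefs: complex coefficients of shape (frames, order, bins); coefs[t, i, f]
           weights frame t - i of bin f when computing output frame t.
    """
    frames, order, bins = coefs.shape
    if spec.shape != (frames, bins):
        raise ValueError("spec and coefs disagree on frames/bins")
    # Prepend order - 1 zero frames so early outputs see silent history (causal filter).
    padded = np.concatenate([np.zeros((order - 1, bins), dtype=complex), spec], axis=0)
    out = np.zeros((frames, bins), dtype=complex)
    for i in range(order):
        delayed = padded[order - 1 - i : order - 1 - i + frames]  # spec delayed by i frames
        out += coefs[:, i, :] * delayed
    return out

rng = np.random.default_rng(0)
frames, bins, order = 50, 100, 5
spec = rng.standard_normal((frames, bins)) + 1j * rng.standard_normal((frames, bins))

# Placeholder coefficients; a trained decoder would predict these frame by frame.
coefs = 0.2 * (rng.standard_normal((frames, order, bins)) + 1j * rng.standard_normal((frames, order, bins)))
enhanced = deep_filter(spec, coefs)

# Sanity check: a single unit tap at i = 0 passes the input through unchanged.
identity = np.zeros((frames, order, bins), dtype=complex)
identity[:, 0, :] = 1.0
```

With `order = 1`, deep filtering reduces to a complex ratio mask. The extra taps are what let the filter exploit correlation across neighboring frames, which suits the slowly evolving harmonics below 5 kHz.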

| Frequency Region | Audio Representation | Processing Method | Focus |
|---|---|---|---|
| Above 5 kHz | Equivalent Rectangular Bandwidth (ERB) | Ideal Ratio Mask | Noise-like components (fricatives) |
| Below 5 kHz | Complex Spectrogram | Deep Filtering | Speech harmonics (vowels, tonality) |

Speech Enhancement 1.0 processes low and high frequencies differently, combining ERB-based masking above 5 kHz with deep filtering below 5 kHz for more natural, high-fidelity speech reconstruction.
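The split in the table can be sketched as routing STFT bins by frequency. Bins below the 5 kHz crossover go to the deep-filtering path, and bins at or above it go to the ERB-masking path. The two outputs are then concatenated back into one spectrum. The path functions below are hypothetical stand-ins (an identity and a flat gain), not Sanas's decoders, and the 48 kHz sample rate and 960-point FFT are assumptions.

```python
import math
import numpy as np

def crossover_bin(fs, n_fft, crossover_hz=5000.0):
    """Index of the first rFFT bin at or above crossover_hz."""
    return math.ceil(crossover_hz * n_fft / fs)

def dual_path_enhance(spec, k, low_path, high_path):
    """Route bins [0, k) to low_path and bins [k, end) to high_path, then re-join them."""
    low = low_path(spec[:, :k])      # e.g. deep filtering on the complex spectrum
    high = high_path(spec[:, k:])    # e.g. ERB-band ratio masking
    return np.concatenate([low, high], axis=1)

fs, n_fft = 48000, 960               # assumed: 48 kHz audio, 20 ms frames (50 Hz per bin)
k = crossover_bin(fs, n_fft)         # 5000 * 960 / 48000 = 100
spec = np.ones((4, n_fft // 2 + 1), dtype=complex)

# Hypothetical stand-ins for the two decoder paths, just to show the routing.
enhanced = dual_path_enhance(
    spec, k,
    low_path=lambda x: x,            # placeholder for deep filtering
    high_path=lambda x: 0.5 * x,     # placeholder for a flat ERB-band gain
)
```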

In short: the dual-decoder design enables Speech Enhancement 1.0 to isolate what matters most — the human voice — with unmatched fidelity and realism.

Innovation 2: Acoustic Simulation for Voice Isolation

In real life, conversations don’t happen in controlled acoustic chambers. They happen in cars, restaurants, open floor-plan offices, and countless other situations. To make Speech Enhancement 1.0 effective in those environments, Sanas needed to teach the model not just what a voice sounds like, but where that voice is coming from.

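Imagine taking a meeting on your laptop at a restaurant table, with colleagues chatting beside you and other patrons all around. Most existing voice-isolation systems rely on simple energy-based or speaker-ID approaches, and both have well-known drawbacks. Energy-based methods assume the loudest voice is the one to keep, so a loud neighboring patron slips through. Speaker-ID methods typically need an enrolled voiceprint and keep only that one voice, cutting out colleagues who are part of the conversation. As a result, both fail in many edge cases.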

To overcome these limitations, Sanas developed a physics-based acoustic simulator that reproduces how sound behaves in real spaces. Our simulator models how sound energy decays and reflects across different distances and surfaces, allowing Speech Enhancement 1.0 to learn the acoustic cues that indicate how far a voice is from the microphone. During training, voices were generated across three distinct “distance regimes”:

- Close range (0–1 meter) — The voice is loud and “dry,” with almost no reverberation.

- Medium range (1–3 meters) — Direct sound energy begins to mix with reflections from nearby surfaces.

- Far range (3+ meters) — Reverberation dominates, and the voice’s specular (dry) component becomes faint or inaudible.

By accurately simulating these conditions, Speech Enhancement 1.0 learned to distinguish primary and secondary speakers — deciding which voices to preserve and which to suppress based on acoustic distance rather than arbitrary thresholds.
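To illustrate why distance is learnable from acoustics, here is a toy room impulse response (RIR) generator. The direct path falls off as 1/r, while the diffuse reverberant tail keeps roughly constant energy, so the direct-to-reverberant ratio (DRR) drops as the talker moves away. This is a textbook diffuse-field approximation using the classic critical-distance estimate (about 0.057·√(QV/RT60)), not Sanas's simulator. The room volume, RT60, the 2.5 ms direct-sound window, and how the regime boundaries are handled at exactly 1 m and 3 m are our assumptions.

```python
import numpy as np

SPEED_OF_SOUND = 343.0    # m/s, room temperature
DIRECT_WINDOW_S = 0.0025  # +/- window treated as "direct sound"

def critical_distance(volume_m3, rt60_s, directivity=1.0):
    """Distance where direct and reverberant energy are equal (diffuse-field approximation)."""
    return 0.057 * np.sqrt(directivity * volume_m3 / rt60_s)

def synthetic_rir(distance_m, volume_m3=60.0, rt60_s=0.5, fs=16000, seed=0):
    """Toy RIR: a 1/r direct impulse followed by an exponentially decaying noise tail.

    The tail's total energy does not depend on distance; it is scaled so that
    direct and reverberant energies match at the critical distance.
    Early reflections are ignored.
    """
    rng = np.random.default_rng(seed)
    n = int(1.5 * rt60_s * fs)
    rir = np.zeros(n)
    direct_idx = int(round(distance_m / SPEED_OF_SOUND * fs))
    rir[direct_idx] = 1.0 / distance_m                        # inverse-distance law
    start = direct_idx + int(DIRECT_WINDOW_S * fs) + 1         # tail begins after the direct window
    t = np.arange(n - start) / fs
    tail = rng.standard_normal(n - start) * 10.0 ** (-3.0 * t / rt60_s)  # energy down 60 dB at rt60_s
    r_c = critical_distance(volume_m3, rt60_s)
    tail *= np.sqrt((1.0 / r_c**2) / np.sum(tail**2))          # reverberant energy = 1 / r_c^2
    rir[start:] = tail
    return rir, direct_idx

def direct_to_reverberant_db(rir, direct_idx, fs=16000):
    """DRR: energy within +/- DIRECT_WINDOW_S of the direct peak vs everything else."""
    w = int(DIRECT_WINDOW_S * fs)
    lo, hi = max(0, direct_idx - w), direct_idx + w + 1
    direct = np.sum(rir[lo:hi] ** 2)
    reverb = np.sum(rir[:lo] ** 2) + np.sum(rir[hi:] ** 2)
    return 10.0 * np.log10(direct / reverb)

def distance_regime(distance_m):
    """The article's 0-1 m / 1-3 m / 3+ m regimes (boundary handling is an assumption)."""
    if distance_m < 1.0:
        return "close"
    if distance_m < 3.0:
        return "medium"
    return "far"

for d in (0.5, 2.0, 5.0):
    rir, idx = synthetic_rir(d)
    print(f"{d:4.1f} m  {distance_regime(d):6s}  DRR = {direct_to_reverberant_db(rir, idx):6.1f} dB")
```

In this toy model the DRR falls by about 6 dB per doubling of distance, because direct energy decays as 1/r² while the diffuse tail does not. Real rooms add early reflections and source directivity, which a physics-based simulator would also need to capture.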

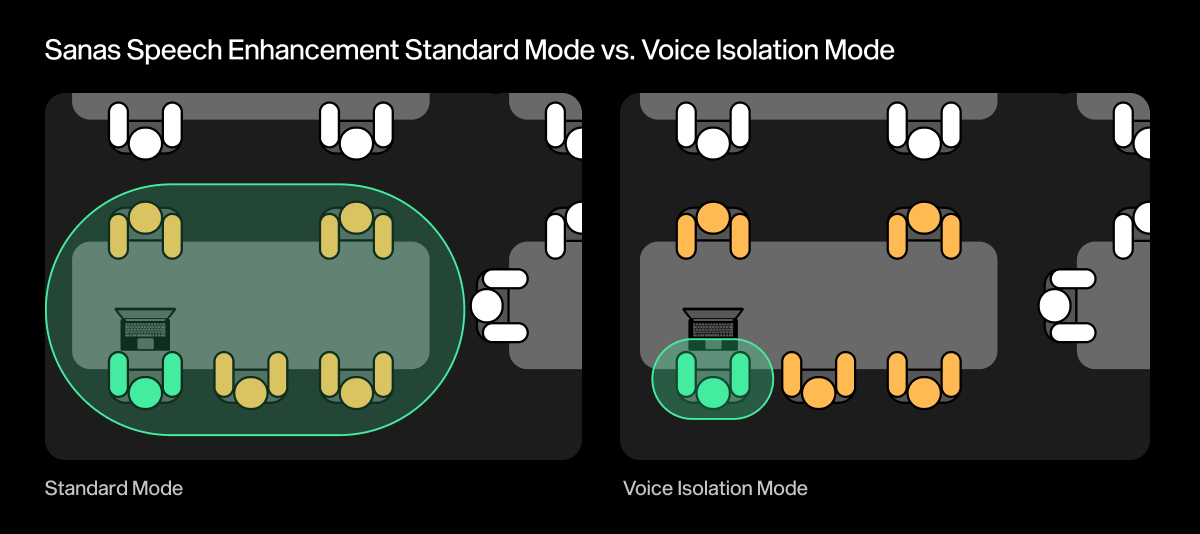

This distinction powers Speech Enhancement 1.0’s two operating modes:

- Standard Mode: Keeps all voices within close and medium range (regimes 1 and 2), preserving nearby voices for a natural multi-speaker experience while removing environmental noise.

- Voice Isolation Mode: Retains only the nearest voice (regime 1), keeping the primary speaker while suppressing other voices, background chatter, and ambient noise for maximum clarity.

| Original (Input) | SE1.0 Standard Mode | SE1.0 Voice Isolation Mode |
|---|---|---|

Training on these simulated environments helps Speech Enhancement 1.0 recognize the primary speaker and decide when to include nearby voices or suppress them. The result is a model that adapts naturally to real-world conversations: in a meeting, it preserves colleagues’ voices; on a private call, it keeps only yours.
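One plausible way to turn the distance regimes into mode-specific training targets is sketched below under our own assumptions. Each simulated source carries a regime label, the model input sums every source, and the target sums only the speech sources whose regime the chosen mode keeps. The `Source` type, the `KEPT_REGIMES` table, and the summation scheme are hypothetical; the article does not describe how Sanas builds its targets.

```python
from dataclasses import dataclass
import numpy as np

# Regimes each mode keeps, following the mode descriptions in this section.
KEPT_REGIMES = {
    "standard": {"close", "medium"},
    "voice_isolation": {"close"},
}

@dataclass
class Source:
    signal: np.ndarray  # assumed already convolved with its simulated RIR
    kind: str           # "speech" or "noise"
    regime: str         # "close", "medium", or "far" (ignored for noise)

def make_training_pair(sources, mode):
    """Return (model input, training target) for one simulated scene."""
    kept = KEPT_REGIMES[mode]
    mixture = np.sum([s.signal for s in sources], axis=0)
    target = np.zeros_like(mixture)
    for s in sources:
        if s.kind == "speech" and s.regime in kept:
            target += s.signal
    return mixture, target

rng = np.random.default_rng(0)
scene = [
    Source(rng.standard_normal(16000), "speech", "close"),   # primary speaker
    Source(rng.standard_normal(16000), "speech", "medium"),  # colleague at the table
    Source(rng.standard_normal(16000), "speech", "far"),     # other patrons
    Source(rng.standard_normal(16000), "noise", "far"),      # dishes, music, HVAC
]
mix, target_std = make_training_pair(scene, "standard")
_, target_iso = make_training_pair(scene, "voice_isolation")
```

The same simulated scene yields a different target for each mode, so one scene can teach both behaviors.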

This approach is especially powerful in ambiguous situations where generic models tend to fail. In the example below, multiple speakers are at the same distance from the microphone, a configuration that many conventional solutions cannot handle reliably.

| Original (Input) | Generic | Sanas SE1.0 Standard Mode |
|---|---|---|

Results and Audio Samples

We compared Speech Enhancement 1.0 against a leading competitor using internally developed test sets made up entirely of real-life recordings. At Sanas, we believe that synthesized samples or low-noise public datasets cannot accurately represent real-world conditions. That’s why every sample in our evaluation comes from an authentic, everyday environment, and the test sets also include a range of edge cases and unpredictable outliers designed to test robustness.

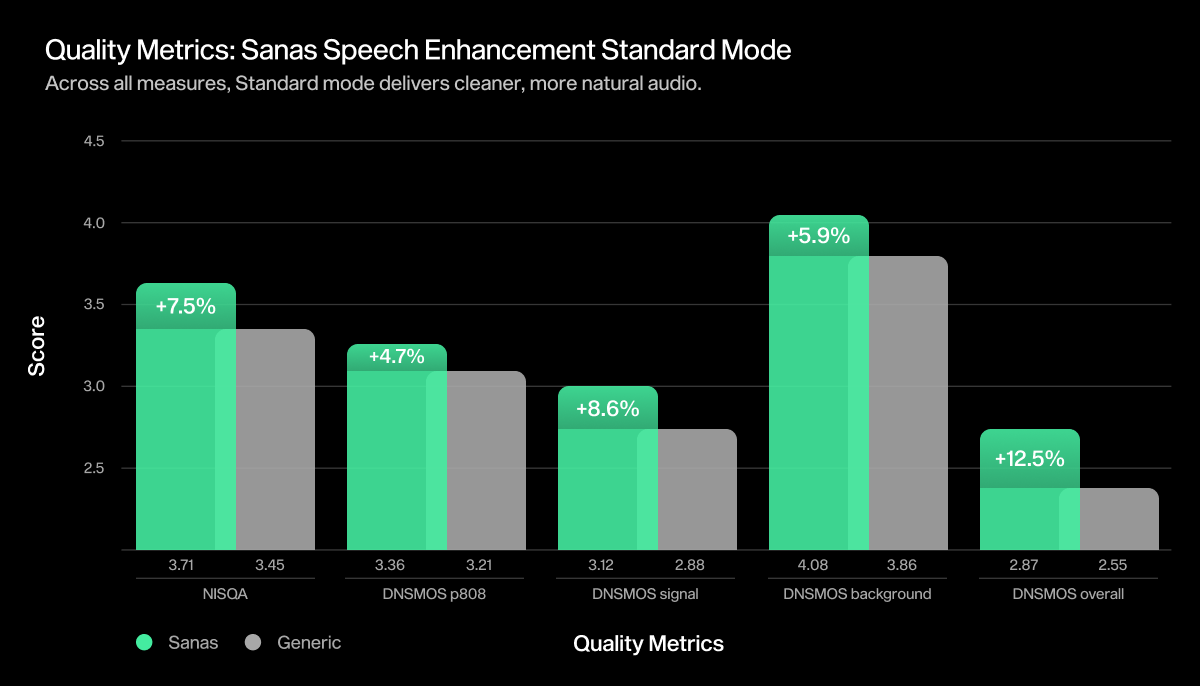

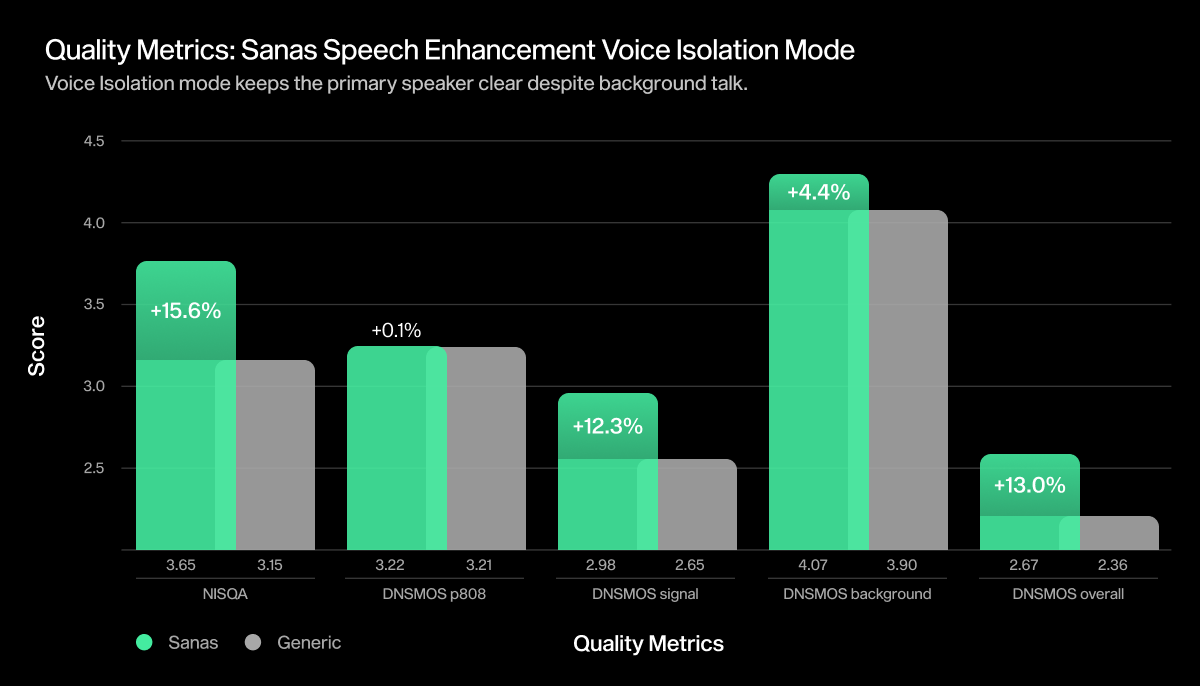

The results speak for themselves. In both operating modes, Speech Enhancement 1.0 consistently outperforms the competitor at suppressing background noise and isolating the primary voice, delivering cleaner, more natural speech across a wide range of acoustic conditions. Readers interested in the full list of objective quality metrics (NISQA, DNSMOS, and more) can find detailed definitions and references in the Appendix.

Test 1: Standard Mode

Speech Enhancement 1.0’s Standard Mode removes distracting sounds while preserving nearby voices for natural group conversation.

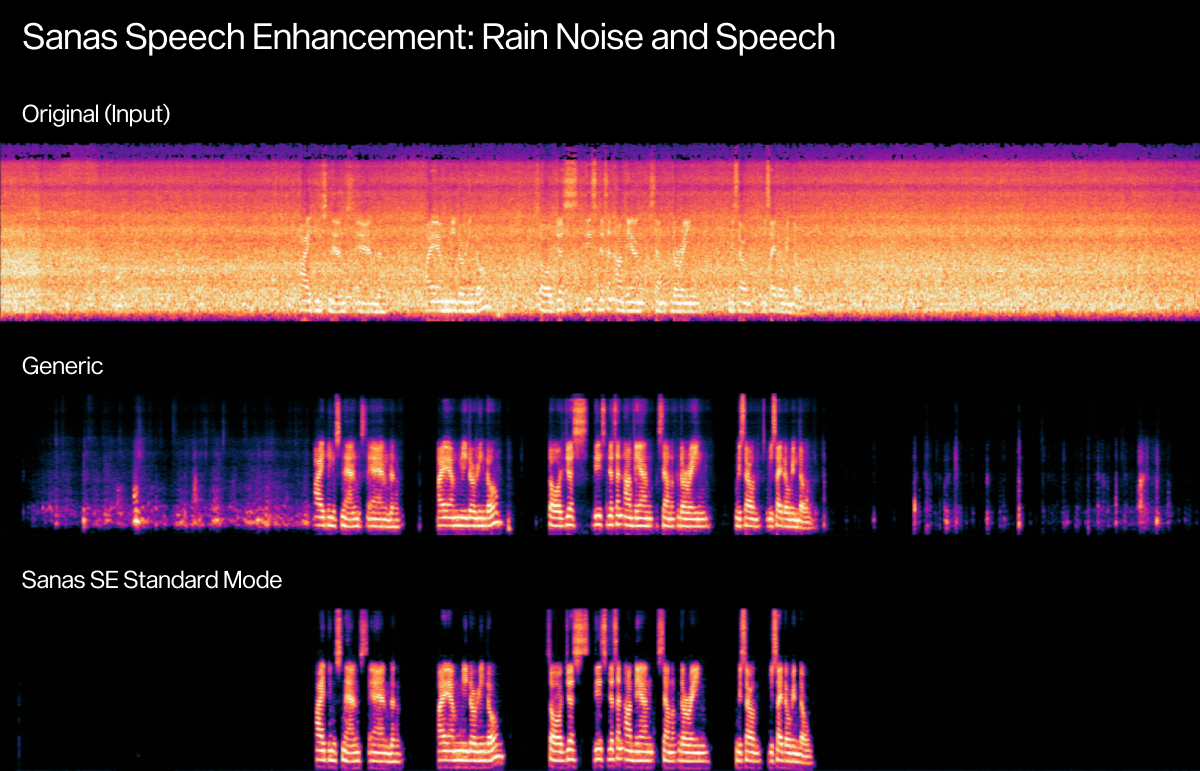

| Original (Input) | Generic | Sanas SE1.0 Standard Mode |
|---|---|---|
| Rain noise and speech | Heavy noise leakage, syllable suppression (“s” in “so”), and muffled speech at times | Clean voice, noise removed entirely |

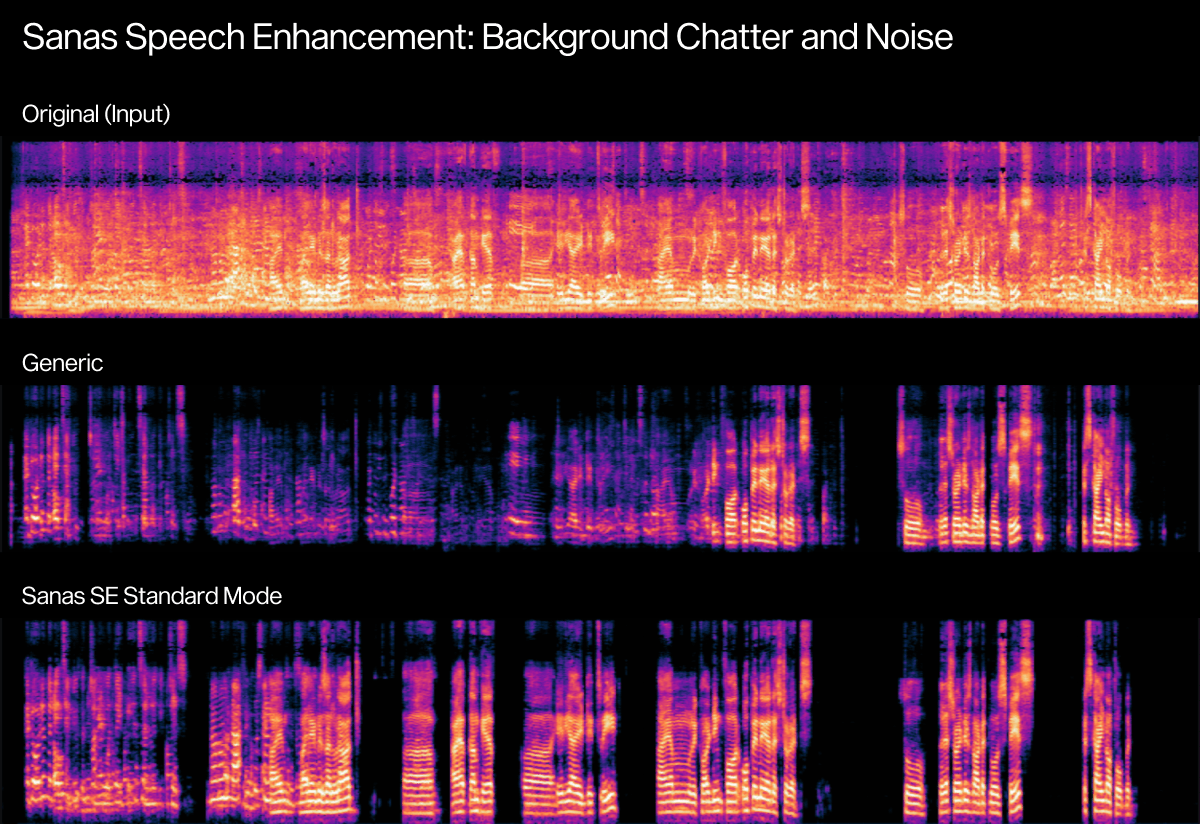

| Original (Input) | Generic Noise Cancellation | Sanas SE1.0 Standard Mode |
|---|---|---|
| Background chatter and noise, multiple people in very close proximity to the microphone | Heavy speech deletion and noise leakage | Speakers in close and medium range from the microphone are kept, primary speaker voice is well extracted |

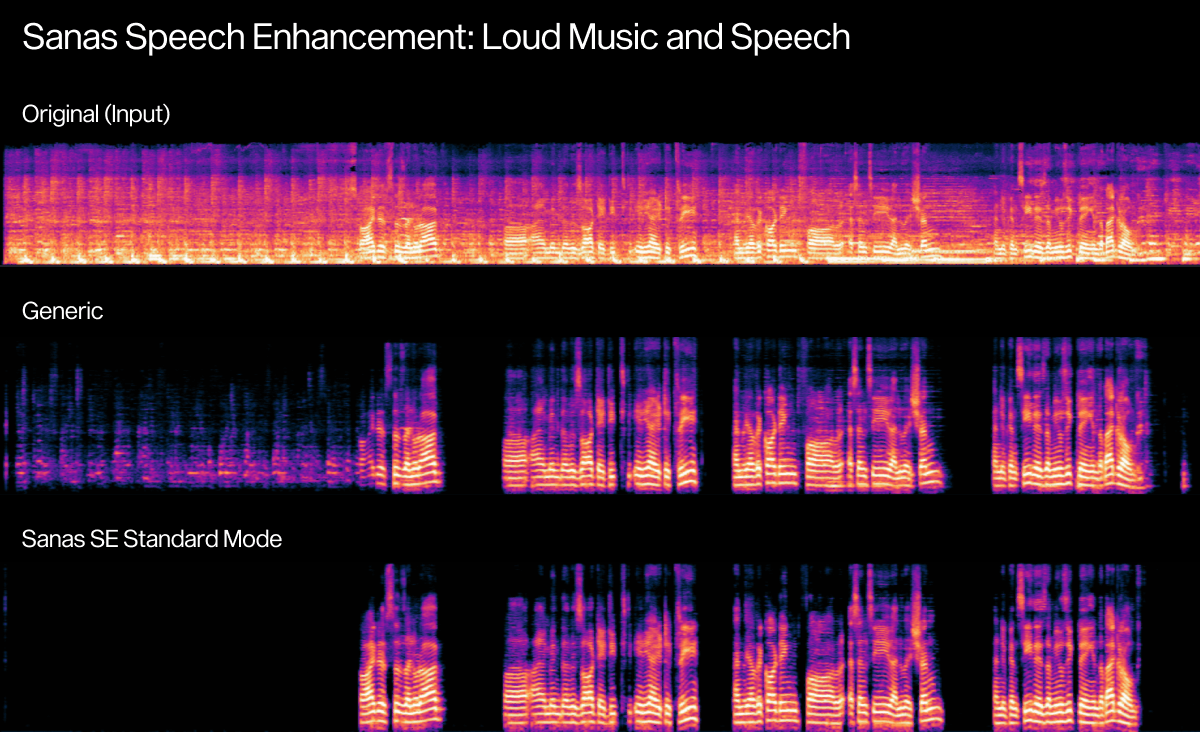

| Original (Input) | Generic | Sanas SE1.0 Standard Mode |
|---|---|---|
| Loud music and speech | Heavy music leakage in silence and during speech | Music fully removed and disentangled from speech |

Test 2: Voice Isolation Mode

When competing human speech is the main interference, Speech Enhancement 1.0’s Voice Isolation Mode focuses solely on enhancing the primary speaker.

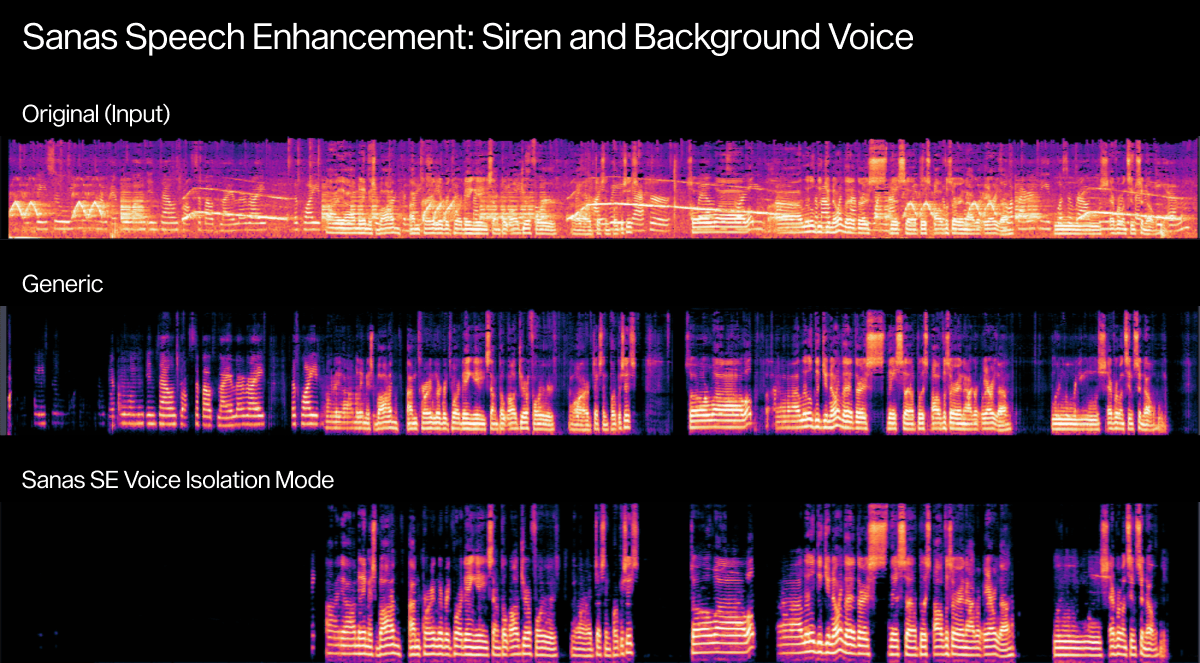

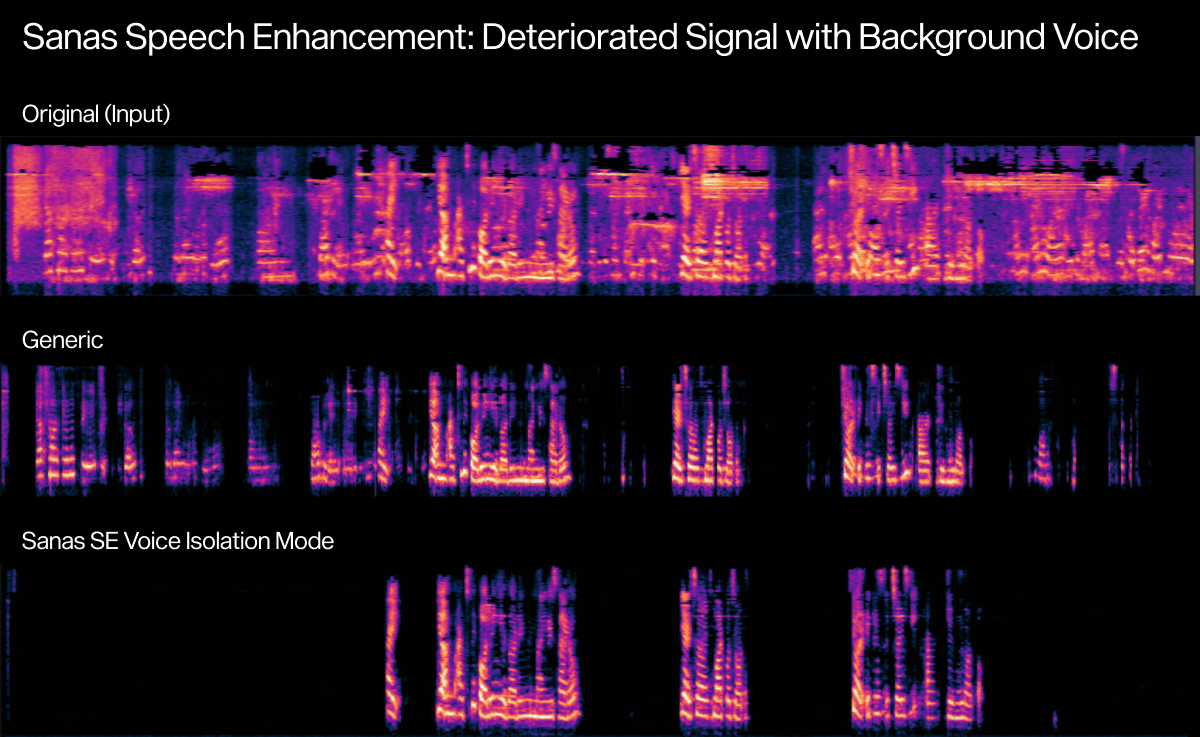

| Original (Input) | Generic | Sanas SE1.0 Voice Isolation Mode |
|---|---|---|
| Siren and background voice | Background voice and noise leakage before and during speech, static noise | No background voice, clear primary speaker voice |

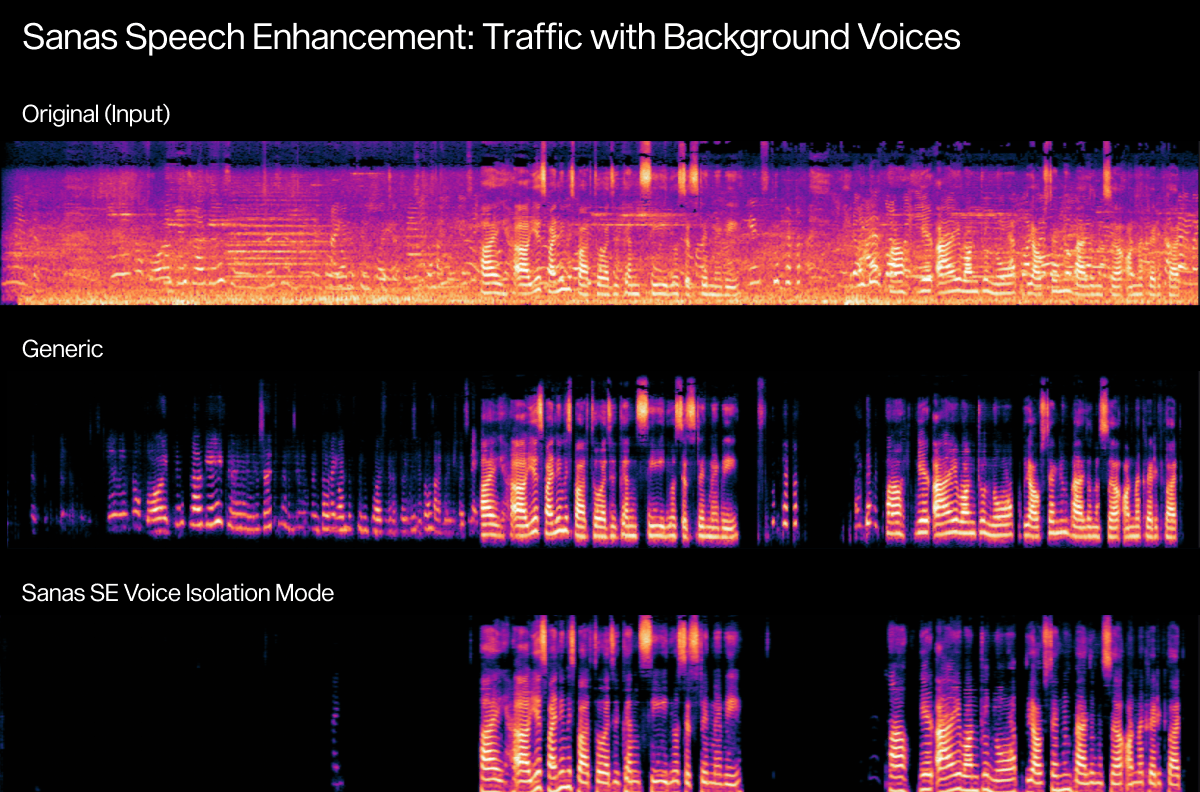

| Original (Input) | Generic | Sanas SE1.0 Voice Isolation Mode |
|---|---|---|
| Speaker in traffic with background voices | Voice leakage during and before speech | No background voice leakage |

| Original (Input) | Generic | Sanas SE1.0 Voice Isolation Mode |
|---|---|---|
| Deteriorated signal with background voice and noise | Background voice leakage and noise leakage | Clean speech, no background voices |

Across both modes, Speech Enhancement 1.0 consistently achieves higher objective scores and superior perceptual quality in listening tests, validating what users hear in practice. These improvements come without added distortion or latency, demonstrating that high fidelity and real-time performance can truly coexist.

Ultimately, Speech Enhancement 1.0 distinguishes and enhances the nuances of human speech to deliver the clarity, warmth, and realism that make every voice sound natural, no matter the environment.

Looking Ahead: Advancing the Science of Speech

Speech Enhancement 1.0 shows what becomes possible when our science team’s rare and unique skills are combined with years of experience, learnings, and feedback gathered through millions of successful deployments in real business environments.

We have created an advanced deep neural network architecture and a new training paradigm that together enable Speech Enhancement 1.0 to generalize across the most challenging conditions.

Voice pipelines now remain stable under unpredictable acoustic shifts without sacrificing low latency or naturalness. For enterprises, this translates into clearer conversations, sharper customer interactions, and more reliable speech transcription, even in challenging acoustic conditions. For consumers, it means high-fidelity clarity in everyday moments: walking down a busy street, joining a call from a café, or taking a hands-free meeting in the car without losing intelligibility.

Stay tuned as we continue to share the science, engineering choices, and lessons learned as we push the boundaries of what real-time speech AI can do.

Appendix 1: Methodology and Evaluation Details

To support reproducibility and provide additional context for researchers, developers, and audio professionals, this appendix summarizes the technical framework and objective evaluation metrics used in assessing Sanas Speech Enhancement 1.0.

All Speech Enhancement 1.0 evaluations were conducted using two internally developed test sets composed entirely of real-life recordings collected in diverse acoustic conditions. These datasets reflect the environments most commonly encountered by Sanas users — including open offices, vehicles, restaurants, and contact centers — as well as extreme edge cases that stress-test model resilience.

Each test set contains overlapping speech, background noise, and reverberation representative of real-world communication. Unlike synthetic datasets or clean-speech corpora, these samples capture true acoustic complexity and were used to measure Speech Enhancement 1.0's performance under the same conditions encountered in practice.

Two operating modes were evaluated:

- Standard Mode – tested with an emphasis on background-noise suppression.

- Voice Isolation Mode – tested with an emphasis on background-voice suppression.

Appendix 2: Why did we use the ERB scale?

The Mel scale is so widely used that its suitability is rarely questioned, yet there is reason to doubt its relevance above 5 kHz in speech signals. In this frequency region, harmonic components carry little energy, and the harmonics that are present are too densely spaced to be individually resolved by human hearing. The Mel scale, based on human pitch perception, becomes less accurate in this range because the ear no longer relies on pitch cues there. What matters perceptually is the spectral envelope, including formant energy, frication noise, and sibilance.

The ERB scale, derived from psychoacoustic measurements of auditory filter bandwidths, approximates how the human cochlea filters sound. It better aligns with how the ear analyzes broadband, noise-like speech cues (such as “s” and “f”), offering a less compressed and more perceptually relevant representation of this region.

Appendix 3: What is the Deep Filtering operation?

Deep Filtering consists of predicting the coefficients of FIR-like filters — one per frequency bin — and convolving the audio signal with them along time steps of the Short-Time Fourier Transform (STFT) instead of time samples, as done in traditional FIR filtering. This provides an efficient and stable way to model time correlations across multiple time steps, effectively capturing the harmonic components that dominate in the frequency range where deep filtering is applied.
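For reference, the operation above is commonly written as follows. This is the general deep-filtering formulation, shown here as a causal filter of order $N$; the article does not say whether Speech Enhancement 1.0 also uses look-ahead frames. $X(t,f)$ is the noisy complex STFT at frame $t$ and frequency bin $f$, and $C_t(i,f)$ are the complex coefficients predicted for frame $t$. The classic time-domain FIR filter is shown alongside for contrast:

$$
Y(t, f) = \sum_{i=0}^{N-1} C_t(i, f)\, X(t - i, f)
\qquad\text{vs.}\qquad
y[n] = \sum_{i=0}^{N-1} h[i]\, x[n - i]
$$

The left-hand filter runs along STFT frames with a separate, time-varying coefficient set for each bin; the right-hand one runs along time-domain samples with a single fixed coefficient set. Setting $N = 1$ reduces deep filtering to a complex ratio mask, $Y(t,f) = C_t(0,f)\,X(t,f)$.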

Appendix 4: Overview of Objective Quality Metrics

Objective speech-quality metrics are used to quantify perceived clarity, naturalness, and noise suppression performance. Detailed metric definitions are provided below.

NISQA (Non-Intrusive Speech Quality Assessment)

- Paper: NISQA: A Deep Learning Based Non-Intrusive Speech Quality Assessment Model (arXiv, 2021)

- A deep learning–based non-intrusive model that predicts perceived speech quality (similar to human MOS ratings) by assessing overall clarity, naturalness, and noise suppression.

DNSMOS (Deep Noise Suppression Mean Opinion Score)

Derived from the Microsoft Deep Noise Suppression Challenge:

- ITU-T P.808 Crowdsourcing Framework (arXiv, 2020)

- DNSMOS Speech, Background Noise, Overall (arXiv, 2021)

DNSMOS metrics come from a set of deep-learning-based, non-intrusive MOS (Mean Opinion Score) predictors trained on large-scale human ratings collected with the ITU-T P.808 crowdsourcing framework. Four scores are reported:

- DNSMOS_p808: General-purpose MOS predictor corresponding to overall human speech-quality judgments.

- DNSMOS_sig: Evaluates how clean and natural the speech signal itself sounds after enhancement, that is, whether the voice remains clear and undistorted.

- DNSMOS_bak: Measures how well the model suppresses background noise. Higher scores indicate cleaner, quieter backgrounds.

- DNSMOS_ovr: Combines speech and background metrics to reflect overall perceived quality.